In this article, we are going to discuss why the AMD EPYC “Rome” generation will likely see 160x PCIe Gen4 lanes, plus likely additional lane(s) for a necessary function. While all of our previous material focused on 128x PCIe lanes in single and dual socket configurations for AMD EPYC, we are expecting a big change in 2019 with the new generation, and one that Intel fully failed to address with its release this week. Intel focused on bringing out a large portfolio rather than addressing the fact that it is about to have a competitor with twice as many cores per socket and well over 3x the PCIe bandwidth in mainstream dual-socket systems.

While I was going through our original AMD EPYC and Intel Xeon Scalable Architecture Ultimate Deep Dive piece from 2017, our team went through some of the technical documentation on AMD EPYC CPUs, and we found something. Over the past few weeks, we have managed to confirm with a number of AMD’s ecosystem partners that our theory is not only valid, but it is indeed what we will see. What we know to be true about AMD EPYC’s dual socket configuration is going to drastically change with Rome. In this article, we are going to show you why.

On the first page, we are going to give you the background on the state of the server chip market today between the second generation Intel Xeon Scalable lineup and the first generation AMD EPYC. On the second page, we are going to show you what will change to make AMD EPYC Rome an absolute monster for PCIe I/O.

Taking Stock of PCIe on the 2019 Second Generation Intel Xeon Scalable CPUs

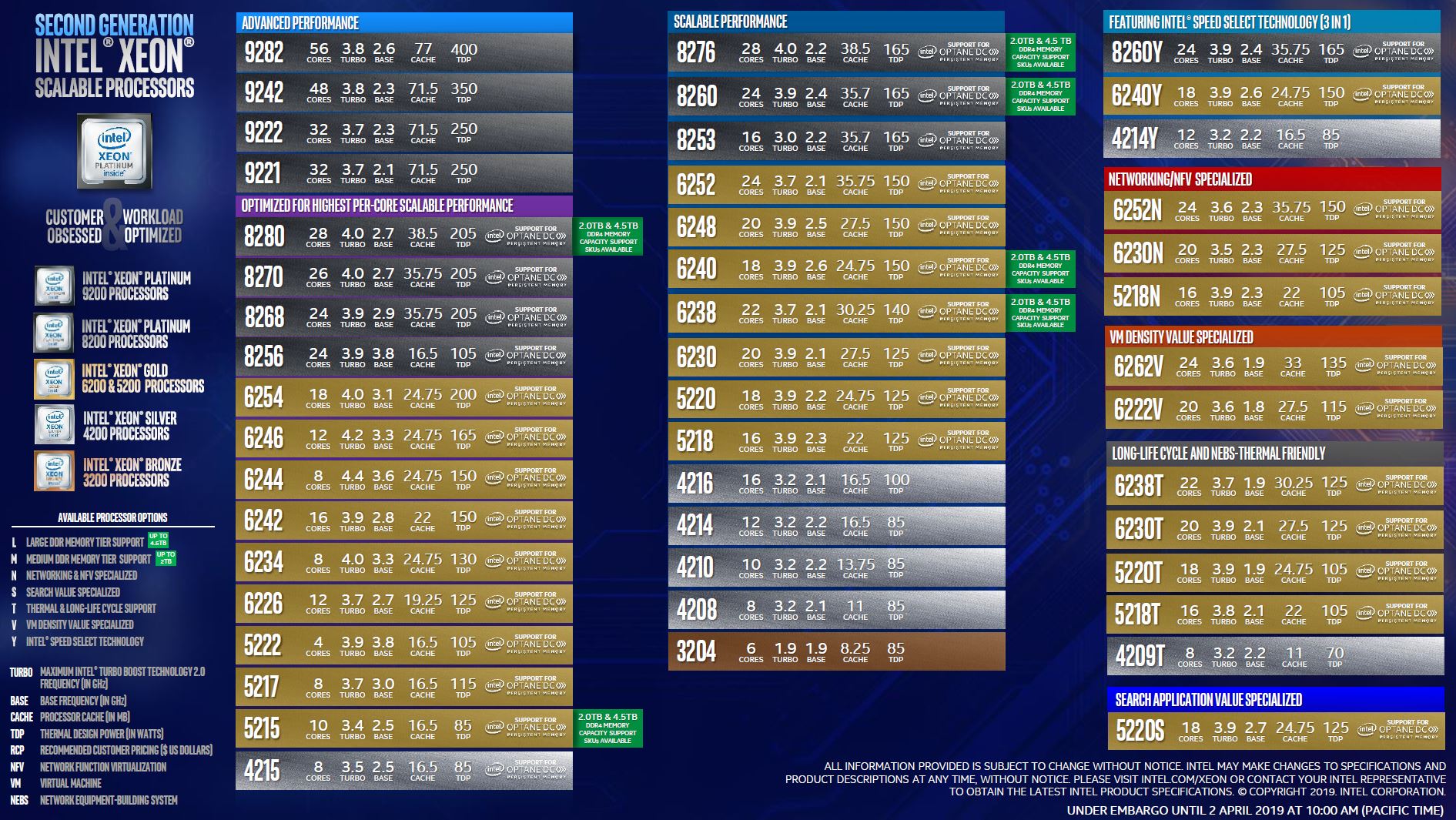

This week, we had the release of the “Cascade Lake” series of Intel Xeon Scalable processors. The second generation Intel Xeon Scalable processor family largely stuck to its roots with similar core counts as well as PCIe Gen3. The company had two mainstream products:

Second generation Intel Xeon Scalable CPUs (codenamed Cascade Lake) with model numbers in the Bronze 32xx, Silver 42xx, Gold 52xx, Gold 62xx, and Platinum 82xx series. Beyond this, there was the advanced performance or “AP” version of these chips, now known as the Platinum 9200 series and formerly “Cascade Lake-AP.”

You can check out STH’s full 2nd Gen Intel Xeon Scalable Launch Details and Analysis including some benchmarks as well as our Second Generation Intel Xeon Scalable SKU List and Value Analysis to see how Intel changed its SKU stack to better align with competitive pressures from AMD EPYC 7001 “Naples” CPUs.

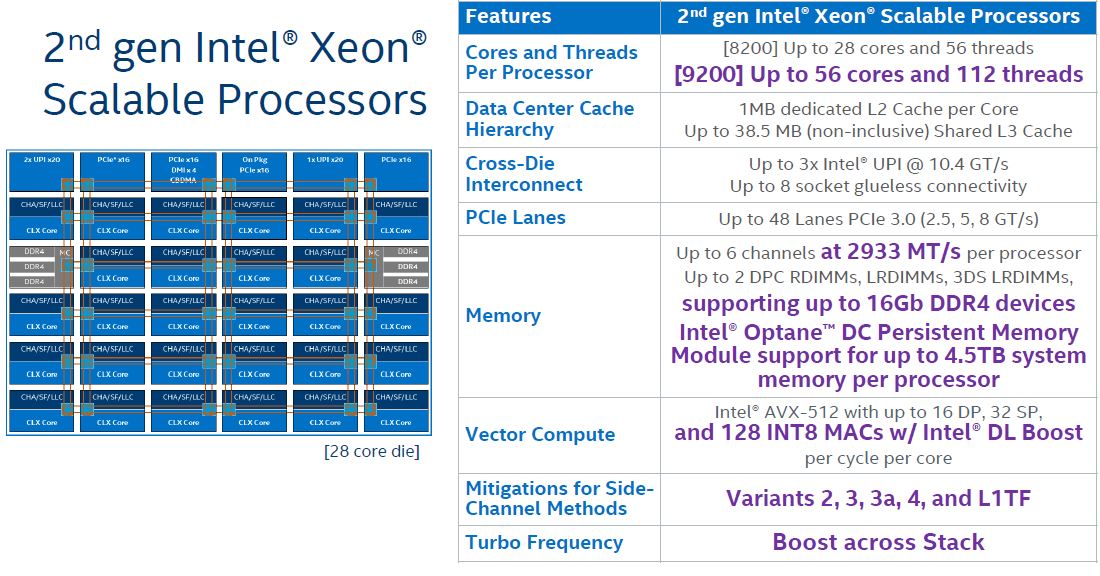

Intel still has a low power PCIe 3.0 x16 interface on the new second-generation chips, but it is limited to 48x PCIe lanes going directly from chip to device. The official Platinum 9200 series sleds top out at 80 PCIe Gen3 lanes in a dual socket system, which is fewer than we find on quad socket Xeon Scalable servers such as the Supermicro SYS-2049U-TR4 we just reviewed.

At this point, it is completely appropriate to cry foul. All of these Intel Xeon Scalable Platforms have a Lewisburg PCH. We confirmed that Intel’s Platinum 9200 series platforms also had them. The Lewisburg PCH has additional PCIe lanes connected via a DMI x4 link back to the CPU. Higher-end Lewisburg PCH options with QuickAssist accelerators and additional networking need additional CPU PCIe lanes to be routed to the PCH. Still, the SATA, networking, and PCIe lanes of Lewisburg serve an important function, keeping lower-requirement I/O off of the valuable CPU PCIe lanes. Typically, AMD EPYC has struggled here, but it seems AMD may be fixing this on some Rome systems. We are going to get to that later in this article.

Realistically, if you want high-performance PCIe 3.0 directly from Intel Xeon Scalable CPUs, these are your options, from dual socket up to eight socket:

- Xeon Platinum 9200: 2 CPUs with 40x PCIe Gen3 lanes each for 80 lanes total

- Xeon Scalable Mainstream: 2 CPUs with 48x PCIe Gen3 lanes each for 96 lanes total

- Xeon Scalable 4P: 4 CPUs with 48x PCIe Gen3 lanes each for 192 lanes total

- Xeon Scalable 8P: 8 CPUs with 48x PCIe Gen3 lanes each for 384 lanes total
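
The totals in this list are simply sockets multiplied by CPU-attached lanes per socket. As a minimal Python sketch of that arithmetic, using only the figures quoted above (the dictionary and function names are ours for illustration, and PCH-attached lanes are deliberately left out):

```python
# Figures quoted in the list above: (sockets, CPU PCIe Gen3 lanes per socket).
# Names are illustrative only; lanes hanging off the Lewisburg PCH are not counted.
XEON_CONFIGS = {
    "Xeon Platinum 9200 (2P)": (2, 40),
    "Xeon Scalable Mainstream (2P)": (2, 48),
    "Xeon Scalable 4P": (4, 48),
    "Xeon Scalable 8P": (8, 48),
}


def total_cpu_lanes(sockets: int, lanes_per_socket: int) -> int:
    """Return the total CPU-attached PCIe lanes in a system."""
    return sockets * lanes_per_socket


def lane_totals(configs=XEON_CONFIGS) -> dict:
    """Map each configuration name to its total CPU-attached lane count."""
    return {
        name: total_cpu_lanes(sockets, lanes)
        for name, (sockets, lanes) in configs.items()
    }


if __name__ == "__main__":
    for name, total in lane_totals().items():
        print(f"{name}: {total} lanes")
```

Keeping the per-socket figure separate from the socket count makes it easy to see that Intel only gets past 128 lanes by adding sockets.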

The Intel Xeon Scalable 8-socket servers are very popular in China because the number 8 has special significance, as does having the largest server. That topology loses a direct connection between each of the CPUs, but it can potentially provide more PCIe bandwidth than we will see with the AMD EPYC Rome generation.

With the new 2nd Generation Intel Xeon Scalable CPUs covered, let us get to the current AMD EPYC 7001 “Naples” CPUs, and then to what is changing in Rome.

AMD EPYC 7001 Series “Naples” Background

To understand what changes with AMD EPYC “Rome” later this year, we need to start with the current “Naples” generation. The current generation managed to get a product shipped, but it did something more. It set market expectations as well as the socket for a platform that will refresh to much higher capacity parts in 2019.

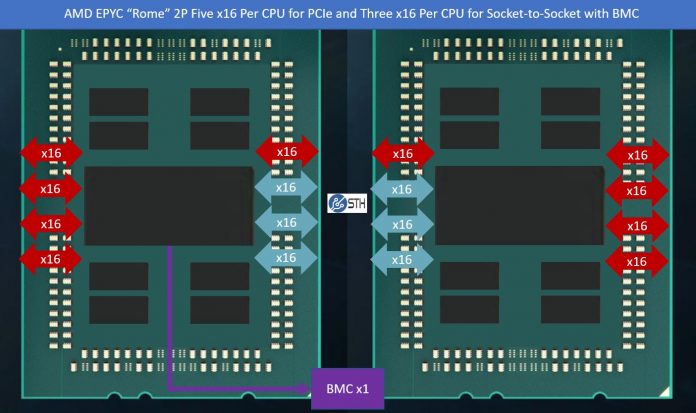

With the AMD EPYC 7001 series, there are a total of eight x16 links per CPU. In a single socket AMD EPYC 7001 server, this gives us 128x PCIe Gen3 lanes.

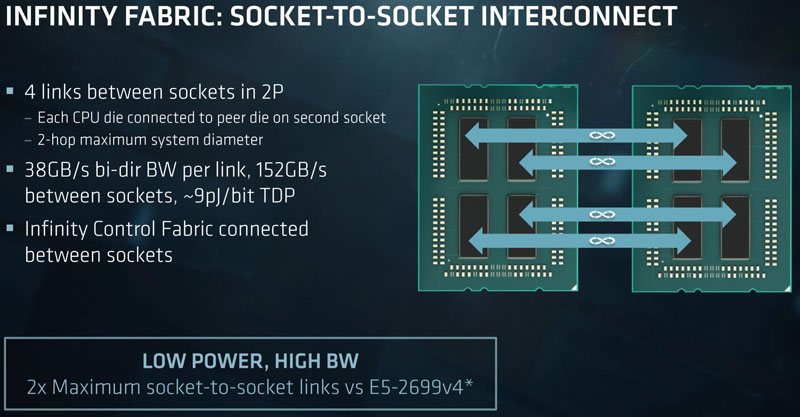

In a dual socket server, each die has a direct link to its sister die on the other socket. This uses one of that die's two x16 links, running in Infinity Fabric mode. When I discuss the AMD EPYC 7001 series Infinity Fabric link between sockets, I usually tell people to conceptually think of it as a PCIe Gen3 x16 link between dies plus a little bit of extra juice. That is not entirely accurate, but most agree that it is a decent rough conceptualization.
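
To make the lane accounting concrete, here is a rough Python sketch of why Naples ends up with 128 PCIe lanes in both single and dual socket systems. The constants come from the description above; the four-dies-per-CPU figure is our inference from eight x16 links per CPU at two per die, and the function name is hypothetical.

```python
LANES_PER_LINK = 16   # each link is x16
LINKS_PER_DIE = 2     # two x16 links per die, per the text
DIES_PER_CPU = 4      # inferred: eight x16 links per CPU / two links per die


def naples_pcie_lanes(sockets: int) -> int:
    """PCIe lanes left for devices in a 1P or 2P Naples system."""
    if sockets not in (1, 2):
        raise ValueError("this sketch only models one or two sockets")
    # In a dual socket system, each die gives up one x16 link to reach
    # its sister die on the other socket over Infinity Fabric.
    fabric_links_per_die = 1 if sockets == 2 else 0
    pcie_links_per_die = LINKS_PER_DIE - fabric_links_per_die
    return sockets * DIES_PER_CPU * pcie_links_per_die * LANES_PER_LINK


if __name__ == "__main__":
    print("1P:", naples_pcie_lanes(1))
    print("2P:", naples_pcie_lanes(2))
```

Adding the second socket doubles the number of dies, but half of each CPU's links are spent on the socket-to-socket connection, so the device-facing lane count does not grow.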

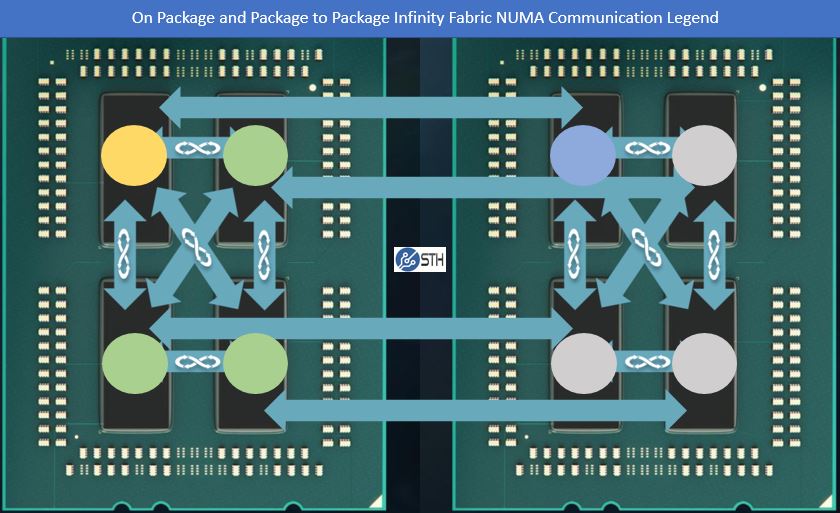

Together, the AMD EPYC 7001 “Naples” Infinity Fabric looks like this:

The die marked with yellow has on-package Infinity Fabric links to each die marked with green. It reaches the die marked in blue on the other socket over the slower and longer x16 Infinity Fabric link. It has two-hop link options to each die marked in grey.
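
The same topology can be sketched as a small graph to check the hop counts. This is only an illustrative model under stated assumptions: four dies per socket (inferred from eight x16 links per CPU at two per die), every die linked to every other die on its package, and one link from each die to its sister die on the other socket. The node labels and function names are ours, and link latency and bandwidth differences are ignored.

```python
from collections import deque
from itertools import combinations

DIES_PER_SOCKET = 4  # assumption: eight x16 links per CPU, two per die


def build_naples_fabric():
    """Adjacency map for a 2P Naples system; nodes are (socket, die) tuples."""
    nodes = [(s, d) for s in range(2) for d in range(DIES_PER_SOCKET)]
    links = {node: set() for node in nodes}
    # On-package links: every die connects to every other die on its socket.
    for s in range(2):
        for a, b in combinations(range(DIES_PER_SOCKET), 2):
            links[(s, a)].add((s, b))
            links[(s, b)].add((s, a))
    # Socket-to-socket links: each die connects only to its sister die.
    for d in range(DIES_PER_SOCKET):
        links[(0, d)].add((1, d))
        links[(1, d)].add((0, d))
    return links


def hop_counts(links, start):
    """Breadth-first search: minimum hops from start to every die."""
    hops = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in links[node]:
            if neighbor not in hops:
                hops[neighbor] = hops[node] + 1
                queue.append(neighbor)
    return hops


if __name__ == "__main__":
    yellow = (0, 0)
    for die, hops in sorted(hop_counts(build_naples_fabric(), yellow).items()):
        print(die, hops)
```

Starting from the "yellow" die at `(0, 0)`, the three on-package dies and the sister die `(1, 0)` are one hop away, while the remaining three dies on the other socket are two hops away, matching the green, blue, and grey groups described above.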

Next, we are going to show what we expect to change in the next generation, including what may be an awesome but underrated architectural improvement.