Design and code to deploy a self-hosted content delivery network.

In this blog post, I discuss the design and implementation of kubeCDN, a tool designed to simplify geo-replication of Kubernetes clusters in order to deploy services with high availability on a global scale.

More Users, More Problems

The internet has transformed how people exchange ideas and share results across the globe. Nevertheless, this medium of innovation is nowhere near perfect. Since the internet has become the first stop for most information, any hindrance in getting what we want quickly can be excruciatingly frustrating. We all know the frustration of waiting those few extra seconds (or even worse, minutes!) for a shared document or video stream to reach us. Because we have become so dependent on internet-based systems in all facets of life, we end up staring at “buffering” animations for a larger portion of our days than we would like. This UX degradation frustrates users and, when competing options exist, pushes them toward alternatives. No business wants to lose customers this way, but reducing this type of customer loss comes with several engineering challenges.

One of the main reasons for user-perceived latency in internet service delivery is that servers are located far from users. If your infrastructure is located in one part of the world and serves a global customer base, users located on the other side of the world will see increased latency when using your service. This increased latency leads to delays in data retrieval, more buffering animations and frustrated users.

Deploying servers closer to users’ locations can reduce this latency, but doing so can be challenging, as managing global infrastructure requires more capital and personnel investments.

Current Solution: Content Delivery Network (CDN) Providers

One way to overcome this challenge is to use a third-party content delivery network provider. CDN providers like Akamai, Cloudflare and Fastly allow companies to build infrastructure in one region and scale their services to a global audience.

CDN providers minimize user access time for your service by caching static content across their points of presence (PoPs) worldwide. These PoPs consist of several edge servers that provide cached content to users requesting static assets like images. If a requested asset is not found, the edge server pulls the asset either from the origin server (i.e., your server) or from nearby edge servers. This setup improves the UX for geographically distributed users, as they see lower latency and less packet loss.

Read more about CDNs and how they work here.

Issues Using CDN Providers

While CDN providers offer a convenient solution to a difficult problem, there are issues associated with these services.

The first issue is that, when utilizing a third-party CDN to deliver your service, you give up some control over your infrastructure. You become reliant upon an external entity to ensure that your users get the best experience. An even more important consideration here is the security of data being transmitted from your service to users. Bugs in a third-party CDN provider’s system, such as this one from 2017, can have serious implications for the security and privacy of your users.

Another issue with a CDN provider is that they may not always have your best interests in mind. You’re likely not their only customer and, at the end of the day, the third-party CDN is a business. They’ll prioritize their profits over the quality of your service.

Finally, the most important issue with using a CDN provider is the amount of insight they gain into your business. A CDN provider can determine the locations of your customers, the times at which they use your service, and the types of devices they use to access it. Business data like this can be very valuable to your competitors, and information leaks to a third party can make your business vulnerable to customer loss.

It is important to keep in mind that, for many teams, these trade-offs might be worth the convenience of using a CDN provider. However, many top engineering teams like Netflix and LinkedIn have decided to handle this internally. What if you want to do the same and build your own CDN?

kubeCDN

This is where kubeCDN comes into play. kubeCDN is a self-hosted content delivery network based on Kubernetes. As a self-hosted solution, you maintain complete control over your infrastructure. It eliminates the need for a third-party service to deliver content, and restores control over the flow of data from your servers to users’ devices.

Design and Architecture

kubeCDN uses Terraform to deploy EKS and other AWS infrastructure components in a chosen region. Route53, the cloud domain name system (DNS) service from AWS, routes users between multiple regions, and ExternalDNS automatically creates DNS records when new services are deployed.

The image below illustrates how Terraform is used in kubeCDN.

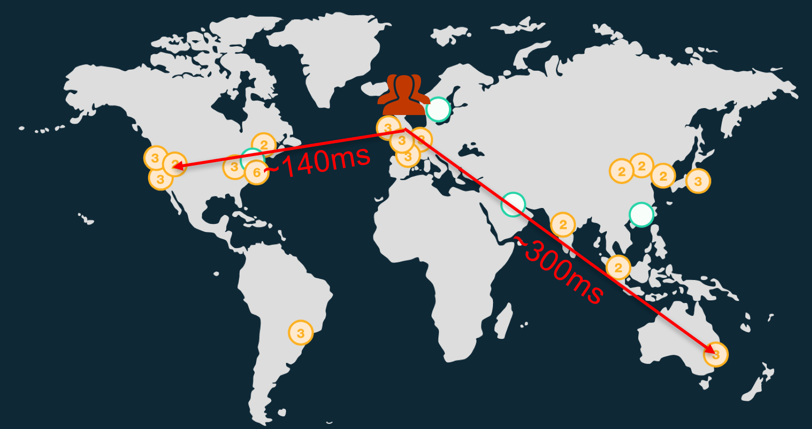

While Terraform is used to deploy the infrastructure necessary for kubeCDN, Route53 is used to route user traffic to specific regions. For my demonstration of kubeCDN shown here, I set up a video server in two AWS regions, us-east-1 and us-west-2. I set up a hosted zone on Route53 for my domain and set A records for each region where I deployed clusters. I used a latency-based routing policy to route users to the region that provided them the lowest latency. In this demonstration, the user was always routed to the geographically closest region. However, please note that this may not always be the case. Latency measurements on the internet can change over time, and these are the measurements that Route53 uses to determine where to route users when this routing policy is in effect. Read more about this here.

The figure above illustrates how Route53 routes user traffic with clusters set up in the two regions mentioned earlier. The user in San Francisco is routed to the cluster in us-west-2 instead of the cluster in us-east-1 because it provided a lower latency when the demonstration was conducted.
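As a hedged illustration, the two latency-based A records described above could be declared in Terraform roughly as follows. This is a sketch under assumptions rather than the configuration used in the demonstration: the `zone_id` variable, the `video.example.com` name, and the IP addresses (taken from documentation-only ranges) are placeholders, and the syntax targets Terraform 0.12 or later with the AWS provider.

```hcl
# Sketch only: zone ID, record name, and IP addresses are placeholders.
variable "zone_id" {
  description = "ID of the Route53 hosted zone for the domain (hypothetical input)"
  type        = string
}

# A record answered for users whose lowest-latency region is us-east-1.
resource "aws_route53_record" "video_east" {
  zone_id        = var.zone_id
  name           = "video.example.com"
  type           = "A"
  ttl            = 60
  set_identifier = "us-east-1" # must be unique among records sharing this name and type
  records        = ["192.0.2.10"]

  latency_routing_policy {
    region = "us-east-1"
  }
}

# A record answered for users whose lowest-latency region is us-west-2.
resource "aws_route53_record" "video_west" {
  zone_id        = var.zone_id
  name           = "video.example.com"
  type           = "A"
  ttl            = 60
  set_identifier = "us-west-2"
  records        = ["198.51.100.10"]

  latency_routing_policy {
    region = "us-west-2"
  }
}
```

Both records share the same name and type. The `set_identifier` tells them apart, and each `latency_routing_policy` block tells Route53 which region's latency measurements apply to that record.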

There are several other routing policies available on Route53 that can be used with kubeCDN to accommodate various application requirements. These are shown here.

Problems Solved

kubeCDN makes it easy to scale services and applications globally in minutes. It took about 15 minutes to deploy the infrastructure needed for the demonstration mentioned above, a significant reduction compared to a manual deployment using the AWS console.

Apart from the ease of scaling, kubeCDN can also optimize infrastructure costs by tearing down regions during periods of low user activity. This level of infrastructure cost optimization can be crucial when budgets are tight to ensure maximum profitability.

Development Challenges

As with any project, I faced a few issues while developing kubeCDN. While most were minor and fairly easy to overcome, some were more challenging and required me to devise workarounds. Two such challenges are described below.

Terraform Code Refactor

The Terraform code used in kubeCDN to deploy EKS clusters to a region is based on a repository created for this webinar from HashiCorp. While the repository is very clear, with detailed instructions, it is not capable of multi-regional deployments. The target region is hard-coded and would require the repository to be replicated for each desired region. This is a tedious manual process that is vulnerable to misconfiguration due to human error. It would also lead to a haphazard management structure, making it difficult to monitor infrastructure and optimize for cost.

Refactoring the Terraform code is a way to overcome this issue. The challenge, however, lies in how to carry out this refactoring. After reading a relevant post and taking a look at another open-source project with similar requirements, I determined that the structure shown below would be the best way to organize the infrastructure portion of the project at this stage.

    ├── terraform (Dir containing all infrastructure code)
        ├── cluster (EKS cluster components)
        │   ├── main.tf
        │   ├── outputs.tf
        │   ├── rbac.yaml
        │   └── variables.tf
        ├── main.tf (Main .tf file where regions are specified)
        └── variables.tf

(Some files have been removed for brevity)

In the directory structure shown above, main.tf is where all desired regions are specified. A region deployment is defined using the following snippet:

    module "<name-for-region-deployment>" {
      source = "./cluster"
      region = "<AWS-region>"
    }

This enables easy definition of new regions in a single config file that is also easy to manage. I’ll discuss additional possibilities for improvement in the Extending kubeCDN section below.
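To make the pattern concrete, here is a minimal sketch of how the two demonstration regions might be declared, along with one plausible way the `cluster` module could consume the `region` input. The module names, the `variable` block, and the in-module `provider` block are assumptions for illustration, not kubeCDN's actual code. The sketch writes the module path as `./cluster`, the form newer Terraform releases expect for local modules.

```hcl
# terraform/main.tf: one module block per region (module names are hypothetical)
module "us_east_1" {
  source = "./cluster"
  region = "us-east-1"
}

module "us_west_2" {
  source = "./cluster"
  region = "us-west-2"
}

# terraform/cluster/variables.tf (assumed): the input that each module block sets
variable "region" {
  description = "AWS region to deploy this EKS cluster into"
  type        = string
}

# terraform/cluster/main.tf (assumed): an AWS provider scoped to the module's region
provider "aws" {
  region = var.region
}
```

Declaring a provider inside a child module keeps each region self-contained, but Terraform treats this as a legacy pattern: a module that configures its own provider cannot be used with `count` or `for_each`. Passing aliased providers in from the root module is the usual alternative.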

ExternalDNS Issues

ExternalDNS is a tool that dynamically configures DNS records via Kubernetes resources. The tool can interface with DNS providers such as Route53 and many others. When deploying a new service to your Kubernetes cluster, such as a web server, ExternalDNS creates DNS records so your web server is discoverable using public DNS servers.
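ExternalDNS commonly discovers the hostname it should publish from an annotation on a Kubernetes Service. The sketch below expresses such a Service through Terraform's Kubernetes provider only to stay in the same language as the rest of this post; a plain YAML manifest carries the same annotation. The service name, labels, ports, and hostname are placeholders, and the sketch assumes a configured `kubernetes` provider and an ExternalDNS deployment that watches Services.

```hcl
# Sketch only: names, labels, ports, and hostname are placeholders.
resource "kubernetes_service" "video" {
  metadata {
    name = "video"

    annotations = {
      # ExternalDNS reads this annotation and creates a matching DNS record.
      "external-dns.alpha.kubernetes.io/hostname" = "video.example.com"
    }
  }

  spec {
    type = "LoadBalancer"

    # Route traffic to pods carrying this (hypothetical) label.
    selector = {
      app = "video"
    }

    port {
      port        = 80
      target_port = 8080
    }
  }
}
```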

For kubeCDN, I intended to use ExternalDNS to automatically create DNS records for different regional deployments and configure Route53 to use a latency-based routing policy to route users to the region providing the lowest latency. While I was able to achieve this, I had to work around a few issues with ExternalDNS that prevented me from fully automating the dynamic configuration of DNS records for services in different regions.

I wanted to set two A records for my domain, one for each deployed region (my demonstration used only two regions): one pointing to my East Coast infrastructure and the other to my West Coast infrastructure. Combined with Route53’s latency-based routing policy, this would direct users to the AWS region that provides them the lowest latency. I incorporated ExternalDNS into kubeCDN and configured my video test service to set a DNS record through ExternalDNS when deployed.

However, I noticed that ExternalDNS overwrites A records for the same domain name, even if the IP address is different. This seems to be a temporary limitation of the AWS provider in ExternalDNS. Given that ExternalDNS is a new tool and still incubating as a project, I decided to address this issue manually for now and will revisit it in the future. This GitHub issue refers to this problem and has been closed since a pull request to fix it was submitted. However, at this point, the pull request still has not been merged into the master branch of ExternalDNS.

Another limitation of the AWS provider in ExternalDNS, as noted here (open issue on GitHub at the time of writing), is the inability to set latency-based routing on Route53. I addressed this by manually setting the routing policy in the AWS Console.

The issues noted here are specific to the AWS provider on ExternalDNS. However, the same issues may not exist for other cloud providers on ExternalDNS or in future versions of ExternalDNS. These will be revisited in the future as the project develops and more features are added.

Extending kubeCDN

Given that kubeCDN was developed over a short period of time (three weeks), there is still room for improvement and I have several ideas to extend the project and its capabilities.

Multi-Cloud Support

At the moment, kubeCDN is solely based on AWS. This is mainly due to the fact that Fellows at Insight are given AWS credits for projects. Using only one cloud provider poses an issue in the event of a provider outage. Adding support for multiple cloud providers, such as GCP and Azure, will provide the necessary failover in such scenarios.

This does present a few challenges with respect to the Kubernetes aspect of kubeCDN. Currently, kubeCDN uses EKS, the managed Kubernetes service from AWS. Adding support for other cloud providers will require one of the following:

- Incorporate managed Kubernetes services from all cloud providers. While this will simplify deployment, incorporating different managed services into kubeCDN will be quite challenging.

- Use a custom Kubernetes deployment. This will involve using kops to install a custom Kubernetes cluster using infrastructure from a chosen provider. It will allow clusters to be uniform across all cloud providers and enable increased flexibility for unique deployment scenarios. In addition to this, kops integration will allow teams to use kubeCDN with on-premise equipment in case all third-party dependencies need to be eliminated.

While the kops option seems to be best for this project long-term, incorporating it will be tricky and require extensive development. Even so, kops-based Kubernetes installation will have great benefits for the project in the future. Multi-region support will also enable regional failover in the event of a provider-specific outage.

Regional Auto-Scaling

While scaling infrastructure globally drastically improves UX, it may not be necessary to run infrastructure in many parts of the world on a 24/7 basis. There may be times that it’s better, from a profitability standpoint, to tear down infrastructure in a region and have the small, or negligible, number of users there experience increased latency. This level of control over infrastructure cost optimization can be extremely beneficial from a financial standpoint.

In order to achieve this, a monitoring solution would be needed to monitor a chosen metric. When this metric reaches a predefined threshold in a region, infrastructure can be dismantled. Additionally, if there is a spike in users from a specific region, similar thresholds can be used to automatically spin up infrastructure in a pre-approved region closer to where the user spike is identified.

List of Pre-defined Regions

The current version of kubeCDN has made it easy to deploy EKS clusters to new regions. However, there are better ways to achieve this than the current method of replicating three lines of code for each region. The ability to list desired regions in a file would be a much cleaner approach.

This feature would also tie in well with the regional auto-scaling feature previously mentioned. Providing kubeCDN with two lists of regions, one where infrastructure needs to run continuously and one approved for regional auto-scaling, would immensely simplify infrastructure management.

Federation of Deployed Kubernetes Clusters

Federation makes it easy to manage multiple clusters. Here is a list of the features that Federation provides. While every feature provided by Federation would be beneficial to kubeCDN, one that particularly stands out is the ability to sync resources across clusters. Keeping resources in sync across clusters is a huge management challenge, and Federation simplifies it immensely. However, Federation is not a mature feature of Kubernetes and has some limitations. Here are some known issues with Federation that the Kubernetes development team is working to solve.

Takeaways

The current version of kubeCDN simplifies geo-replication of Kubernetes clusters and allows for easy scaling. Being self-hosted, kubeCDN allows for the accommodation of unique infrastructure requirements and provides immense infrastructure management flexibility.

However, there are several limitations and opportunities for enhancement that would make kubeCDN a more robust tool. Some of these have been discussed in this post, and I expect the list to grow as I receive feedback. Another major factor affecting future features will be the improvements coming to the Kubernetes project itself. I also anticipate that the maturing of Kubernetes Federation will drastically change the functions and features of kubeCDN in the future.

I sincerely hope that you find kubeCDN to be a useful tool. Feel free to reach out to me if you have any questions.

Ilhaan Rasheed was an Insight DevOps Engineering Fellow in early 2019. He developed kubeCDN during his tenure at Insight. Connect with him at ilhaan.com.
