Have you ever heard a song you liked so much you wished it would last forever?

“aisatsana” is the final track off Aphex Twin’s 2012 release, Syro. A departure from the synthy dance tunes which make up the majority of Aphex Twin’s catalog, aisatsana is quiet, calm, and perfect for listening to during activities which require concentration. But with a measly running time just shy of five and a half minutes, the track isn’t nearly long enough to sustain a session of reading or coding. Playing the track on repeat isn’t satisfactory; exact repetition becomes monotonous quickly. I wished there were an hour-long version of the track, or even better, some system which could generate an endless performance of the track without repetition. Since I build software for a living, I decided to try creating such a system.

Musical Structure

Allow me to explain the relatively simple structure of aisatsana. If you’re not familiar with music theory, I’ll do my best to define the terms I’ll be using and avoid any that aren’t necessary. Try not to get hung up on the vocabulary though.

A beat is an abstract unit of time. For example, I could tell you to snap your fingers on beats one and three, and clap your hands on beats two and four, and the resulting song would go snap, clap, snap, clap. The speed at which a song is played can be measured in beats per minute, or BPM. If I told you to play that same song at 60 BPM (or one beat per second), you’d either be snapping or clapping with each tick of a clock, and it would take four seconds to play the whole song. aisatsana is played at 102 BPM.

aisatsana follows a very simple pattern. If you start counting at the first note, every 16 beats contains a sequence of notes, which I call a phrase. For example, from 3 seconds to 12 seconds contains one phrase, and from 12 seconds to 21 seconds contains another phrase. The piece continues to play a new phrase every 16 beats until the end, for a total of 32 phrases.

This is much simpler than most examples of popular music, which usually have markedly different sections like the chorus, verses, and maybe a bridge or a pre-chorus.

With this perspective, one could describe aisatsana succinctly as an algorithm:

Every 16 beats, play a 16 beat phrase

I think about this algorithm in two parts:

- Every 16 beats, do something.

- Play a 16 beat phrase.

To create a system which could play aisatsana-like music endlessly, it would need to be able to fulfill these two requirements. Part one is easy (remember, a beat is just a unit of time, so it can be read as “every X seconds, do something”). Part two is a little trickier.

An Algorithm For Writing Music

One totally valid strategy for making aisatsana last forever would be to separate the 32 phrases and write a program to select and play a phrase every 16 beats. The result would probably be nicely varied, and no doubt enjoyable to listen to for longer than simply playing the original track over and over. However, I feel this approach would still be too repetitive. My brain would learn to recognize all 32 phrases and the output of such a system would become boring.

It was important to me that the system could create and play new phrases in addition to the original ones. The challenge was to generate new phrases which sounded similar to the originals; the system shouldn’t just play random notes for 16 beats. Ideally, someone who’d never heard aisatsana before could listen to my system without knowing which phrases were new and which were from the original track.

I began researching methods for generating new music which was similar to some input. Naturally, I found many solutions which involved deep learning techniques. However, with a paltry sample size of 32 phrases, I was worried these techniques would require significantly more input than I had to offer. Instead, I decided to try an older technique which used Markov Chains.

You can learn all about Markov chains with a quick internet search, but I’ll try to explain it with an example. A Markov chain records a set of possible states and the probabilities of transitioning from one state to another. Let’s pretend in your whole life you only ever go to three places: your home, your workplace, and the grocery store. In this sad existence of yours there are three states: either you’re at home (state one), or you’re at your workplace (state two), or you’re at the grocery store (state three).

If I were creepy, I could follow you around for some time and record where you go. Eventually, I could analyze my data to determine the probability of where you’ll go next based on where you currently are. For example, perhaps I’ve observed that when you’re at home there’s an 80% chance the next place you’ll go is your workplace, and a 20% the next place you’ll go is the grocery store. When you’re at work, there’s a 50% chance you’ll next go to the grocery store and a 50% chance you’ll go home. Finally, when you’re at the grocery store, there’s a 95% chance you’ll go home next and only a 5% chance you’ll go to work next.

This is all that’s needed to create a Markov chain: states, and the probabilities of transitioning from each state to the others. I’ll apply this to music with a simple example.

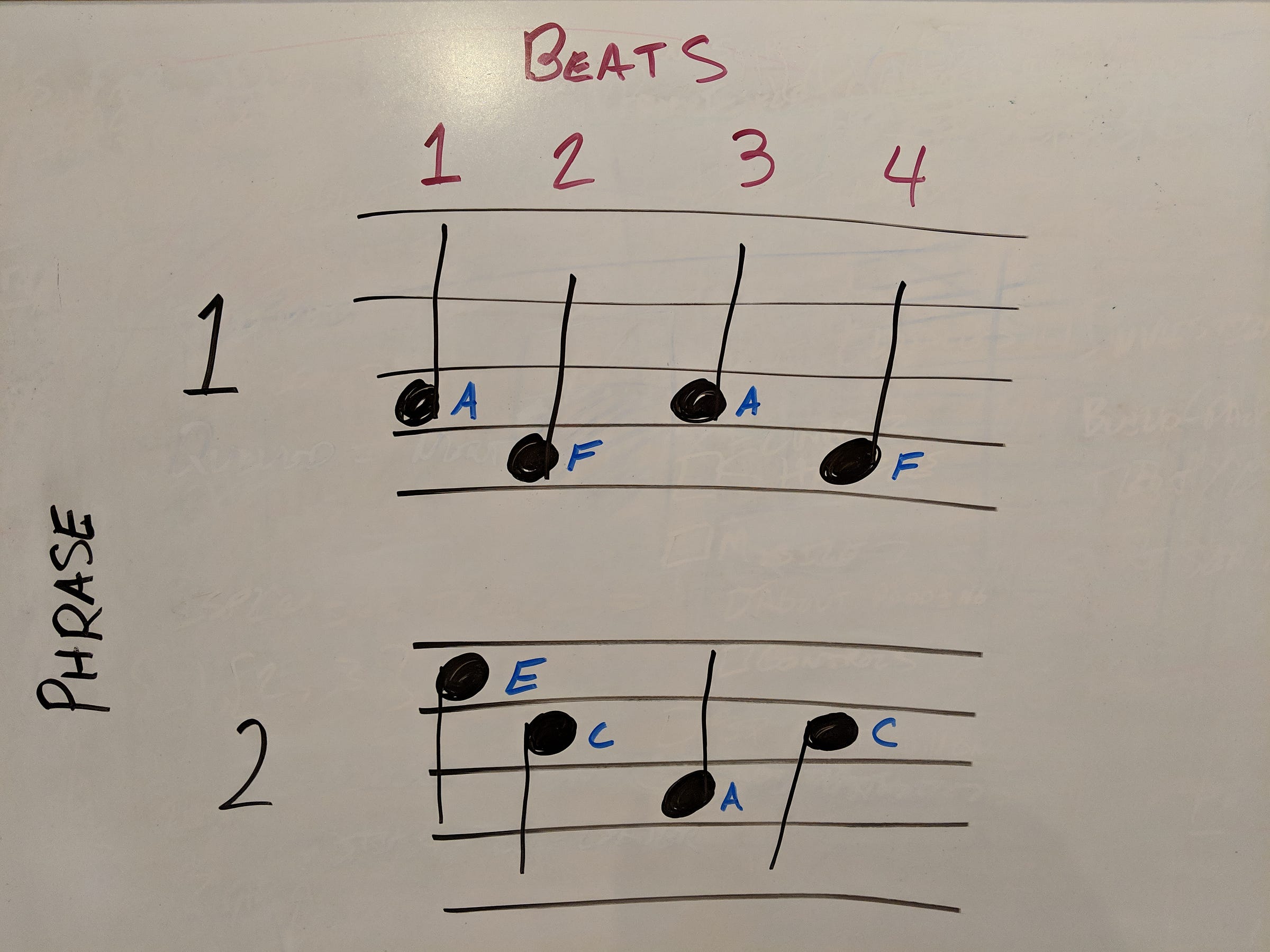

Below I’ve drawn out two four-beat phrases in standard musical notation. I’ve also written the note names next to each note and the beat count at the top in case you don’t read music. So to play phrase one, you’d play an A on beat one, an F on beat two, an A again on beat three, and an F again on beat four. To play phrase two, you’d play an E on beat one, a C on beat two, an A on beat three, and a C on beat four. Easy!

Let’s build a Markov chain to describe these two phrases. For the state names, I’ll use a combination of the beat and the note, like 1A to describe an A played on beat one. I’m also going to add a start and an end state to denote the beginning and end of a phrase. I’ll use an arrow (→) to denote a transition from one state to another.

In this notation, phrase one is start→1A→2F→3A→4F→end and phrase two is start→1E→2C→3A→4C→end. There are 9 states: start, 1A, 1E, 2F, 2C, 3A, 4F, 4C, and end, and here are the probabilities for each transition:

start→1A: 50%

start→1E: 50%

1A→2F: 100%

1E→2C: 100%

2F→3A: 100%

2C→3A: 100%

3A→4F: 50%

3A→4C: 50%

4F→end: 100%

4C→end: 100%

With this Markov chain, we can generate phrases by beginning at start and following the transitions until we reach end. This is called walking the chain.

Here’s an algorithm for walking this Markov chain:

- Begin at start.

- Record the current state. Select one of the transitions from the current state based on the probability of each transition, and follow that transition to the next state.

- Repeat step 2 until you’ve reached end.

Let’s generate a phrase. Beginning at start, there’s a 50% chance of transitioning to 1A and a 50% chance of transitioning to 1E. I’ll flip a coin; heads, we go to 1A, tails, we go to 1E. Golly, it landed heads, so now we’re at 1A. So far the phrase we’re generating looks like start→1A.

1A always transitions to 2F, so now we have start→1A→2F. Again, we can see that 2F always transitions to 3A, which puts us at start→1A→2F→3A. Here’s where things get interesting.

3A has a 50% chance of transitioning to 4F and a 50% chance of transitioning to 4C. I could flip another coin to determine which state we select; let’s say heads means we go to 4F and tails means we go to 4C. If I flip a coin and it lands on heads, we’ll have generated a copy of the first original phrase (start→1A→2F→3A→4F→end). But if the coin lands on tails, we’ll have generated a new phrase: start→1A→2F→3A→4C→end!

If we take every possible path through our Markov chain, we’ll see it’s capable of generating four phrases:

start→1A→2F→3A→4F→end (original phrase one)

start→1A→2F→3A→4C→end (new phrase)

start→1E→2C→3A→4C→end (original phrase two)

start→1E→2C→3A→4F→end (new phrase)

Look at that! The Markov chain helped us generate two new phrases. If we played all four phrases in a row to someone who had never heard any of them, that person probably wouldn’t be able to tell which ones were original and which were new. The phrases sound similar to each other because they were all built from the same Markov chain.

This strategy can be applied to aisatsana as well with a much larger Markov chain. This small example only has two phrases, and each only contains four beats. aisatsana has 32 phrases, each made up of 16 beats. Even though there will be more states and transitions, you can go through the exact same process of building and walking a Markov chain to generate phrases both new and original.

Building the Chain

The first thing I needed was a digital representation of aisatsana to work with. While our little example above was easy to calculate and walk by hand, doing the same with aisatsana would take a very long time. Note that an audio file wouldn’t work; I needed the actual instructions for playing the piece, like which notes are played and when. This is precisely what MIDI files are for, and the very nice folks who run the MIDM Database have a MIDI file for aisatsana!

I wanted my system to run in the browser, so I decided to build my system in JavaScript. While one could parse a MIDI file with JavaScript, I preferred to work with something in a JSON format, and found a tool online to make the conversion for me. You can view the JSON output from the conversion here.

If you examine the JSON file above, you’ll see a property named tracks which is an array containing three objects, one of which has a non-empty property notes . notes is an array of objects, each of which includes a note name and the time in seconds at which the note is to be played. This is exactly the information needed to build a Markov chain!

Quick NOTE (heh…): the note names in the JSON linked above are all a letter followed by a number, like “G3.” It’s not important that you understand what the number means, just understand that a letter followed by a number indicates a more specific note than a letter by itself. If you’re curious, the number indicates the octave in which the note is played.

At this point, I must confess that there’s a small difference between aisatsana and the phrases I used in the example above which affected how I built the Markov chain. In my example phrases, every note occurred on one of the beats. However, this isn’t the case in aisatsana. Some of the notes occur halfway between two beats. There’s two ways one could adjust the chain-building strategy to account for this:

- Treat aisatsana as if it were played at 204 BPM, which is double the actual speed. This would mean each phrase is made of 32 beats instead of 16.

- Count “half-beats” instead of beats.

The implementations are identical, but I’ll continue writing from the perspective of the latter approach.

To build the Markov chain, I needed to know which half-beat each note is played on. The output of the MIDI file unfortunately doesn’t indicate this information, but it does include the time of each note in seconds. Since we know the speed of the track is 102 BPM (beats per minute, remember?), we can easily calculate the length of each half-beat. To do this, simply convert 102 BPM to seconds per half-beat. First, change 102 BPM to half-beats per minute:

102 beats per minute multiplied by 2 half-beats per beat equals 204 half-beats per minute

Then, convert it to seconds per half-beat:

60 seconds per minute divided by 204 half-beats per minute equals about 0.29 seconds per half-beat

Now it’s easy to determine which half-beat each note occurs on. For example, any note played between 0 and 0.29 seconds can be attributed to the first half-beat. Notes played between 0.29 and 0.58 seconds are attributed to the second half-beat, and so on. Just iterate through the array of notes to determine which half-beat each note occurs on. In music production this process is called quantization.

Once the notes were quantized, I separated them into the aforementioned 32 phrases. Since each phrase is made of 32 half-beats (or 16 beats), this is a trivial process. All that was left to do was create the Markov chain.

In my implementation (which I’ll link below), to denote the states I used a combination of the half-beat number relative to the phrase and the notes played during that half-beat. For example, 1,C4,A4 indicates a C4 and an A4 played during half-beat 1 of the phrase. If no notes were played during the half-beat, I simply used the half-beat number, like 3. Each phrase includes 32 states, all starting with a number between 1 and 32. If you recall the notation I used earlier, a phrase of aisatsana might be represented like start→1,C4,A4→2→3,G5→4→…(and so on)…→30→31,C4→32→end.

With all of the phrases converted into state names, I was ready to calculate the transition probabilities. While many software developers abide by the DRY principle (“Don’t Repeat Yourself”), I feel it’s just as important to adhere to DRO (“Don’t Repeat Others”) when possible. I found a library on npm appropriately named “markov-chains” which could take my phrases as input and also provided a .walk() function which returns a list of states created by walking the chain. This enabled my system to generate new and original phrases of aisatsana.

I found a collection free piano samples in the wonderful Community Edition of Versilian Studios Chamber Orchestra 2, and I could play them through the Web Audio API with the equally amazing Tone.js. Thus, the “play a 16 beat phrase” requirement of the algorithm I mentioned at the beginning of this article was fulfilled, and my dream system was complete.

Generative Music

You can listen to my implementation on generative.fm as long as you’re using a browser which supports the Web Audio API, which you probably are. On the site, select “aisatsana (generative remix),” and then press play. If you’d like to inspect the code for yourself, you can find it in a Github repository here.

I am incredibly pleased with the result. All of the phrases sound like they belong in the same piece, and the playback is sufficiently random so as not to be tiresome. In fact, the system is capable of generating over four million unique phrases, which is enough to play for over 451 days without playing the same phrase twice. I often listen to the output from this system for several hours at a time, especially when I’m coding, reading, or writing (I’m listening to it as I type this).

An interesting transformation took place during this process. The original piece of music is static. It has a beginning and an end, and everything in between is specified in a particular order. Every time you listen to the recording or hear it performed live, the same piece of music is played, even if it’s performed remotely on a grand piano swinging across a stage like a pendulum.

Through the process outlined in this article, a system has been created which generates new music on demand. This system will play for as long as you’re willing to listen, but never repeat itself in any discernible way. It will invent a new sequence of phrases to play every time it starts. In other words, you’ll never hear it play the same thing twice. I don’t feel this is better or worse than the original, but it’s profoundly different.

Creating systems to generate music is not new. Brian Eno created several such systems and coined the term “generative music” to describe their output. He was inspired by composers like Steve Reich, who had also experimented with generative music systems. You can find an unbelievably fantastic overview of generative music by Tero Parviainen at teropa.info/loop.

It’s truly incredible that we can create unique, complex musical output from simple systems which run on your smartphone’s internet browser. With the accessibility of technologies like the Web Audio API and deep learning libraries, I look forward to a future full of amazing musical systems unbound by the limitations of traditionally written and recorded music.