In 2012, computer scientist Dharmendra Modha used a powerful supercomputer to simulate the activity of more than 500 billion neurons—more, even, than the 85 billion or so neurons in the human brain. It was the culmination of almost a decade of work, as Modha progressed from simulating the brains of rodents and cats to something on the scale of humans.

The simulation consumed enormous computational resources (1.5 million processors and 1.5 petabytes, or 1.5 million gigabytes, of memory) and was still agonizingly slow, 1,500 times slower than the brain computes. Modha estimates that to run it in biological real time would have required 12 gigawatts of power, about six times the maximum output capacity of the Hoover Dam. “And yet, it was just a cartoon of what the brain does,” says Modha, chief scientist for brain-inspired computing at IBM Almaden Research Center in northern California. The simulation came nowhere close to replicating the functionality of the human brain, which uses about the same amount of power as a 20-watt lightbulb.
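To put those figures side by side, here is a back-of-the-envelope sketch in Python. It uses only the numbers quoted above; the variable names are illustrative, and the 12-gigawatt figure is Modha's own estimate rather than a measurement.

```python
# Figures quoted in the text; names are illustrative only.
SIM_POWER_W = 12e9     # estimated power to run the simulation in real time
BRAIN_POWER_W = 20.0   # the "20-watt lightbulb" comparison for the human brain
SLOWDOWN = 1_500       # the simulation ran 1,500 times slower than biology

# How many times more power the real-time simulation would need than a brain.
power_ratio = SIM_POWER_W / BRAIN_POWER_W

# Wall-clock minutes the actual (slow) run needed per second of brain activity.
minutes_per_biological_second = SLOWDOWN / 60

print(f"Power ratio: {power_ratio:,.0f}x")
print(f"Minutes of computing per simulated second: {minutes_per_biological_second:g}")
```

On these numbers, a real-time run would draw roughly 600 million times the power of the organ it imitates, and the run as performed needed about 25 minutes of computing for each second of simulated activity.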

Since the early 2000s, improved hardware and advances in experimental and theoretical neuroscience have enabled researchers to create ever larger and more-detailed models of the brain. But the more complex these simulations get, the more they run into the limitations of conventional computer hardware, as illustrated by Modha’s power-hungry model.

Modha’s human brain simulations were run on Lawrence Livermore National Lab’s Blue Gene/Q Sequoia supercomputer, an immensely powerful conglomeration of traditional computer hardware: it’s powered by a whole lot of conventional computer chips, fingernail-size wafers of silicon containing millions of transistors. The rules that govern the structure and function of traditional computer chips are quite different from those that govern our brains.

But the fact that computers “think” very differently than our brains do actually gives them an advantage when it comes to tasks like number crunching, while making them decidedly primitive in other areas, such as understanding human speech or learning from experience. If scientists want to simulate a brain that can match human intelligence, let alone eclipse it, they may have to start with better building blocks—computer chips inspired by our brains.

So-called neuromorphic chips replicate the architecture of the brain—that is, they talk to each other using “neuronal spikes” akin to a neuron’s action potential. This spiking behavior allows the chips to consume very little power and remain power-efficient even when tiled together into very large-scale systems.

“The biggest advantage in my mind is scalability,” says Chris Eliasmith, a theoretical neuroscientist at the University of Waterloo in Ontario. In his book How to Build a Brain, Eliasmith describes a large-scale model of the functioning brain that he created and named Spaun.1 When Eliasmith ran Spaun’s initial version, with 2.5 million “neurons,” it ran 20 times slower than biological neurons even when the model was run on the best conventional chips. “Every time we add a couple of million neurons, it gets that much slower,” he says. When Eliasmith ran some of his simulations on digital neuromorphic hardware, he found that they were not only much faster but also about 50 times more power-efficient. Even better, the neuromorphic platform became more efficient as Eliasmith simulated more neurons. That’s one of the ways in which neuromorphic chips aim to replicate nature, where brains seem to increase in power and efficiency as they scale up from, say, 300 neurons in a worm brain to the 85 billion or so of the human brain.

Neuromorphic chips’ ability to perform complex computational tasks while consuming very little power has caught the attention of the tech industry. The potential commercial applications of neuromorphic chips include power-efficient supercomputers, low-power sensors, and self-learning robots. But biologists have a different application in mind: building a fully functioning replica of the human brain.

POWER GRID: Neuromorphic hardware takes a page from the architecture of animal nervous systems, relaying signals via spiking that is akin to the action potentials of biological neurons. This feature allows the hardware to consume far less power and run brain simulations orders of magnitude faster than conventional chips.

Many of today’s neuromorphic systems, from chips developed by IBM and Intel to two chips created as part of the European Union’s Human Brain Project, are also available to researchers, who can remotely access them to run their simulations. Researchers are using these chips to create detailed models of individual neurons and synapses, and to decipher how units come together to create larger brain subsystems. The chips allow neuroscientists to test theories of how vision, hearing, and olfaction work on actual hardware, rather than just in software. The latest neuromorphic systems are also enabling researchers to begin the far more challenging task of replicating how humans think and learn.

It’s still early days, and truly unlocking the potential of neuromorphic chips will take the combined efforts of theoretical, experimental, and computational neuroscientists, as well as computer scientists and engineers. But the end goal is a grand one—nothing less than figuring out how the components of the brain work together to create thoughts, feelings, and even consciousness.

“It’s one of the most ambitious technological puzzles that we can take on—reverse engineering the brain,” says computer engineer Mike Davies, director of Intel’s neuromorphic computing lab.

It’s all about architecture

Caltech scientist Carver Mead coined the term “neuromorphic” in the 1980s, after noticing that, unlike the digital transistors that are the building blocks of modern computer chips, analog transistors more closely mirror the biophysics of neurons. Specifically, very tiny currents in an analog circuit—so tiny that the circuit was effectively “off”—exhibited dynamics similar to the flow of ions through channels in biological neurons when that flow doesn’t lead to an action potential.

Intrigued by the work of Mead and his colleagues, Giacomo Indiveri decided to do his postdoctoral research at Caltech in the mid-1990s. Now a neuromorphic engineer at the University of Zurich in Switzerland, Indiveri runs one of the few research groups continuing Mead’s approach of using low-current analog circuits. Indiveri and his team design the chips’ layout by hand, a process that can take several months. “It’s pencil and paper work, as we try to come up with elegant solutions to implementing neural dynamics,” he says. “If you’re doing analog, it’s still very much an art.”

Once they finalize the layout, they email the design to a foundry, one of the same semiconductor fabrication plants that manufacture chips used in smartphones and computers. The end result looks roughly like a smartphone chip, but it functions like a network of “neurons” that propagate spikes of electricity through several nodes. In these analog neuromorphic chips, signals are relayed via actual voltage spikes that can vary in their intensity. As in the brain, information is conveyed through the timing of the spikes from different neurons.

“If you show the output of one of these neurons to a neurophysiologist, he will not be able to tell you whether it’s coming from a silicon neuron or from a biological neuron,” says Indiveri.

These silicon neurons represent an imperfect attempt to replicate the nervous system’s organic wetware. Biological neurons are mixed analog-digital systems; their action potentials mimic the discrete pulses of digital hardware, but they are also analog in that the voltage levels in a neuron influence the information being transmitted.

Analog neuromorphic chips feature silicon neurons that closely resemble the physical behavior of biological neurons, but their analog nature also makes the signals they transmit less precise. While our brains have evolved to compensate for their imprecise components, researchers have instead taken the basic concept into the digital realm. Companies such as IBM and Intel have focused on digital neuromorphic chips, whose silicon neurons replicate the way information flows in biological neurons but with different physics, for the same reason that conventional digital chips have taken over the vast majority of our computers and electronics—their greater reliability and ease of manufacture.

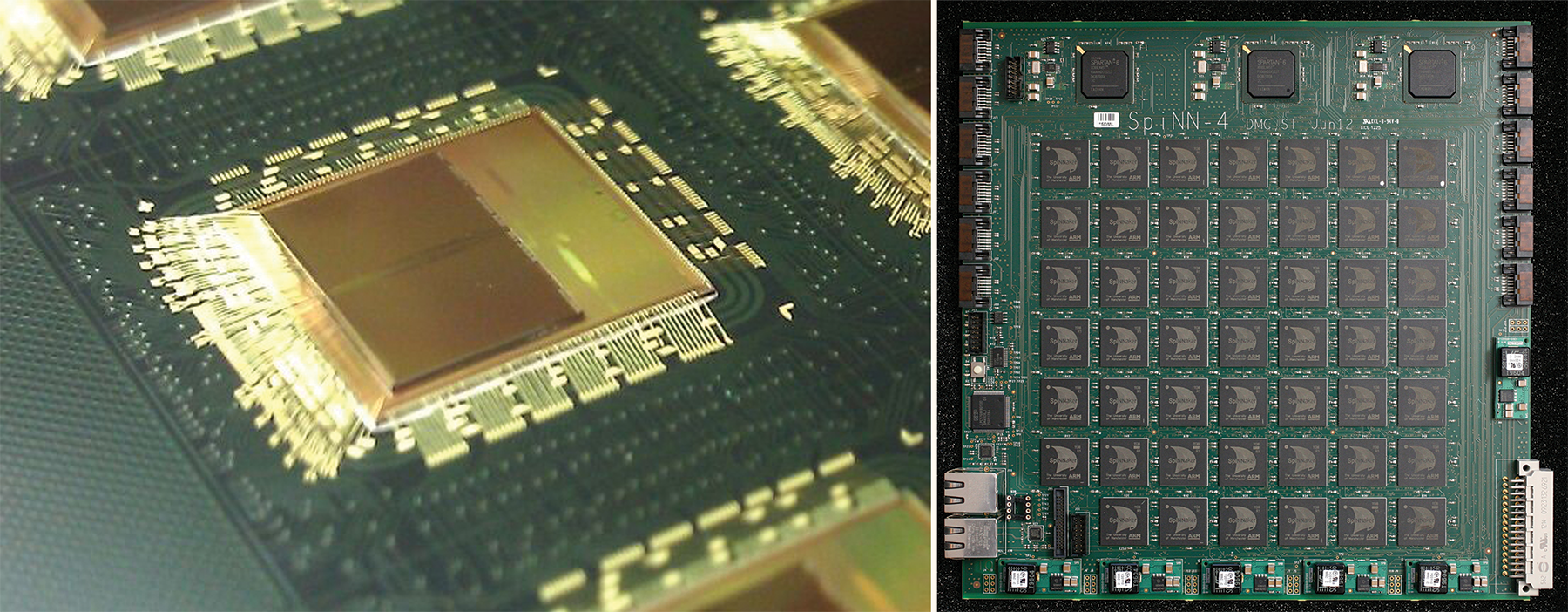

PHOTOS COURTESY UNISEM EUROPE LTD

BUILDING BLOCKS: Each SpiNNaker chip is packaged together with memory (top left), then tiled together into larger devices, such as the 48-node board on the top right. Multiple boards can be connected together to form larger SpiNNaker systems (above).

UNIVERSITY OF MANCHESTER, BY STEVE FURBER AND COLLEAGUES

But these digital chips maintain their neuromorphic status in the way they capture the brain’s architecture. In these digital neuromorphic chips, the spikes come in the form of packets of information rather than actual pulses of voltage changes. “That’s just dramatically different from anything we conventionally design in computers,” says Intel’s Davies.

Whatever form the spikes take, the system only relays the message when inputs reach a certain threshold, allowing neuromorphic chips to sip rather than guzzle power. This is similar to the way the brain’s neurons communicate whenever they’re ready rather than at the behest of a timekeeper. Conventional chips, on the other hand, are mostly linear, shuttling data between memory hardware, where data are stored, and a processor, where data are computed, under the control of a strict internal clock.
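The threshold behavior described above can be sketched with a leaky integrate-and-fire neuron, a standard textbook simplification. This is not the circuit or code of any chip mentioned here; the function name and the `threshold`, `leak`, and `reset` values are assumptions chosen for illustration.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Return the time steps at which a leaky integrate-and-fire neuron spikes.

    All parameter values are hypothetical, picked only to show the idea.
    """
    v = reset                      # membrane "voltage" of the model neuron
    spike_times = []
    for t, current in enumerate(input_current):
        v = leak * v + current     # leak a little, then integrate the input
        if v >= threshold:         # a message is sent only past the threshold
            spike_times.append(t)
            v = reset              # start accumulating again after the spike
    return spike_times


quiet = simulate_lif([0.05] * 20)  # weak input: never reaches the threshold
busy = simulate_lif([0.4] * 20)    # stronger input: spikes periodically
print(quiet)
print(busy)
```

With the weak input the neuron stays silent for the whole run and sends nothing, which is the sense in which such hardware sips power: communication happens only when there is something to report.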

When Modha was designing IBM’s neuromorphic chip, called TrueNorth, he first analyzed long-distance wiring diagrams of the brain, which map how different brain regions connect to each other, in macaques and humans.2 “It really began to tell us about the long-distance connectivity, the short-distance connectivity, and about the neuron and synapse dynamics,” he says. By 2011, Modha had created a chip that contained 256 silicon neurons, the same scale as the brain of the worm C. elegans. Using the latest chip fabrication techniques, Modha packed in the neurons more tightly to shrink the chip, and tiling 4,096 of these chips together resulted in the 2014 release of TrueNorth, which contains 1 million synthetic neurons (about the scale of a honeybee brain) and consumes a few hundred times less power than conventional chips.3

Neuromorphic chips such as TrueNorth have a very high degree of connectivity between their artificial neurons, similar to what is seen in the mammalian brain. The massively parallel human brain’s 85 billion neurons are highly interconnected via approximately 1 quadrillion synapses.

TrueNorth is quite a bit simpler—containing 256 million “synapses” connecting its 1 million neurons—but by tiling together multiple TrueNorth chips, Modha has created two larger systems: one that simulates 16 million neurons and 4 billion synapses, and another with 64 million neurons and 16 billion synapses. More than 200 researchers at various institutions currently have access to TrueNorth for free.
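The scaling figures quoted for TrueNorth can be checked with a few lines of arithmetic. The sketch below uses only numbers from the text. The chip counts of 16 and 64 are inferred by division and are not stated in the article, and the function name is illustrative.

```python
# Figures from the text; the names are illustrative.
NEURONS_PER_UNIT = 256           # the 256-neuron building block from 2011
UNITS_PER_CHIP = 4_096           # units tiled together in the 2014 chip
SYNAPSES_PER_CHIP = 256_000_000  # "256 million" synapses per chip, as quoted

neurons_per_chip = NEURONS_PER_UNIT * UNITS_PER_CHIP  # about 1 million


def tiled_system(n_chips):
    """Neuron and synapse totals when n_chips chips are tiled together."""
    return n_chips * neurons_per_chip, n_chips * SYNAPSES_PER_CHIP


# Assumption: the two larger systems correspond to 16 and 64 chips.
for n_chips in (16, 64):
    neurons, synapses = tiled_system(n_chips)
    print(f"{n_chips} chips: {neurons:,} neurons, {synapses:,} synapses")
```

Under that assumption the totals round to the quoted 16 million neurons with 4 billion synapses and 64 million neurons with 16 billion synapses, which still leaves the largest system more than a thousand times short of the human brain's 85 billion neurons.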

In addition to the highly interconnected and spiking qualities of neuromorphic chips, they copy another feature of biological nervous systems: unlike traditional computer chips, which keep their processors and memory in separate locations, neuromorphic chips tend to have lots of tiny processors, each with small amounts of local memory. This configuration is similar to the organization of the human brain, where neurons simultaneously handle both data storage and processing. Researchers think that this element of neuromorphic architecture could enable models built with these chips to better replicate human learning and memory.

Learning ability was a focus of Intel’s Loihi chip, first announced in September 2017 and shared with researchers in January of last year.4 Designed to simulate about 130,000 neurons and 130 million synapses, Loihi incorporates models of spike-timing-dependent plasticity (STDP), a mechanism by which synaptic strength is mediated in the brain by the relative timing of pre- and post-synaptic spikes. One neuron strengthens its connection to a second neuron if it fires just before the second neuron, whereas the connection strength is weakened if the firing order is reversed. These changes in synaptic strength are thought to play an important role in learning and memory in human brains.
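The timing rule just described is often written as a pair-based exponential update. The sketch below is that generic textbook form, not Loihi's actual learning engine; the function name and the `a_plus`, `a_minus`, and `tau` values are hypothetical.

```python
import math


def stdp_weight_change(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Change in synaptic strength for one pre/post spike pair (times in ms).

    Generic pair-based STDP with made-up parameters, for illustration only.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre-synaptic neuron fired first: strengthen the connection.
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        # Post-synaptic neuron fired first: weaken the connection.
        return -a_minus * math.exp(dt / tau)
    return 0.0


print(stdp_weight_change(t_pre=10.0, t_post=15.0))  # positive: strengthened
print(stdp_weight_change(t_pre=15.0, t_post=10.0))  # negative: weakened
```

The exponential term makes the change largest when the two spikes are nearly coincident and lets it fade as they move apart, so only closely timed activity reshapes a connection.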

Davies, who led the development of Loihi, says the intention is to capture the rapid, life-long learning that our brains are so good at and current artificial intelligence models are not. Like TrueNorth, Loihi is being distributed to various researchers. As more groups use these chips to model the brain, Davies says, “hopefully some of the broader principles of what’s going on that explain some of the amazing capability we see in the brain might become clear.”

Neuromorphics for neuroscience

For all their potential scientific applications, TrueNorth and Loihi aren’t built exclusively for neuroscientists. They’re primarily research chips aimed at testing and optimizing neuromorphic architecture to increase its capabilities and ease of use, as well as exploring various potential commercial applications. Those range from voice and gesture recognition to power-efficient robotics and on-device machine learning models that could power smarter smartphones and self-driving cars. The EU’s Human Brain Project, on the other hand, has developed two neuromorphic hardware systems with the explicit goal of understanding the brain.

BrainScaleS, launched in 2016,5 combines many chips on large wafers of silicon—more like ultra-thin frisbees than fingernails. Each wafer contains 384 analog chips, which operate rather like souped-up versions of Indiveri’s analog chips, optimized for speed rather than low power consumption. Together they simulate about 200,000 neurons and 49 million synapses per wafer.

BUILDING A FUNCTIONAL MODEL OF THE BRAIN: Neuromorphic technology is powering ever bigger and more-complex brain models, which had begun to reach their limits with modern supercomputing. Spaun is one example. The 2.5 million–neuron model recapitulates the structure and functions of several features of the human brain to perform a variety of cognitive tasks. Much like humans, it can more easily remember a short sequence of numbers than a long sequence, and is better at remembering the first few and last few numbers than the middle numbers. While researchers have run parts of the current Spaun model on conventional hardware, neuromorphic chips will be crucial for efficiently executing larger, more-complicated versions now in development.

When shown a series of numbers (1), Spaun’s visual system compresses and encodes the image of each number as a pattern of signals akin to the firing pattern of biological neurons. Information about the content of each image (the concept of the number) is then encoded as another spiking pattern (2), before it is compressed and stored in working memory along with information about the number’s position in the sequence (3). This process mimics the analysis and encoding of visual input in the visual and temporal cortices and storage in the parietal and prefrontal cortices. When asked to recall the sequence sometime later, the model’s information decoding area decompresses each number, in turn, from the stored list (4), and the motor processing system maps the resulting concept to a motor command firing pattern (5). Finally, the motor system translates the motor command for a computer simulation of a physical arm to draw each number (6). This is akin to human recall, where the parietal cortex draws memories from storage areas of the brain such as the prefrontal cortex and translates them into behavior in the motor area.

BrainScaleS, along with the EU’s other neuromorphic system, called SpiNNaker, benefits from being part of the Human Brain Project’s large community of theoretical, experimental, and computational neuroscientists. Interactions with this community guide the addition of new features that might help scientists, and allow new discoveries from both systems to quickly ripple back into the field.

Steve Furber, a computer engineer at the University of Manchester in the UK, conceived of SpiNNaker 20 years ago, and he’s been designing it for more than a decade. After toiling away at the small digital chips underlying SpiNNaker for about six years, Furber says, he and his colleagues achieved full functionality in 2011. Ever since, the research team has been assembling the chips into machines of ever-increasing size, culminating in the million-processor machine that was switched on in late 2018.6 Furber expects that SpiNNaker should be able to model the 100 million neurons in a mouse brain in real time—something conventional supercomputers would do about a thousand times slower.

Access to the EU Human Brain Project systems is currently free to academic labs. Neuroscientists are starting to run their own programs on the SpiNNaker hardware to simulate high-level processing in specific subsystems of the brain, such as the cerebellum, the cortex, or the basal ganglia. For example, researchers are trying to simulate a small repeating structural unit—the cortical microcolumn—found in the outer layer of the brain responsible for most higher-level functions. “The microcolumn is small, but it still has 80,000 neurons and a quarter of a billion synapses, so it’s not a trivial thing to model,” says Furber.

Next, he adds, “we’re beginning to sort of think system-level as opposed to just individual brain regions,” inching closer to a full-scale model of the 85 billion–neuron organ that powers human intelligence.

Mimicking the brain

Modeling the brain using neuromorphic hardware could reveal the basic principles of neuronal computation, says Richard Granger, a computational neuroscientist at Dartmouth College. Neuroscientists can measure the biophysical and chemical properties of neurons in great detail, but it’s hard to know which of these properties are actually important for the brain’s computational abilities. Although the materials used in neuromorphic chips are nothing like the cellular matter of human brains, models using this new hardware could reveal computational principles for how the brain shuttles and evaluates information.

Replicating simple neural circuits in silicon has helped Indiveri discover hidden benefits of the brain’s design. He once gave a PhD student a neuromorphic chip that had the ability to model spike frequency adaptation, a mechanism that allows humans to habituate to a constant stimulus. Crunched for space on the chip, the student decided not to implement this feature. However, as he worked to lower the chip’s bandwidth and power requirements, he ended up with something that looked identical to the spike frequency adaptation that he had removed. Indiveri and his colleagues have also discovered that the best way to send analog signals over long distances is to represent them not as a continuously variable stream, for example, but as a sequence, or train, of spikes, just like neurons do. “What’s used by neurons turns out to be the optimal technique to transmit signals if you want to minimize power and bandwidth,” Indiveri says.
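Spike frequency adaptation can be illustrated with a simple model neuron whose every spike adds to a slowly decaying brake on its own input. This is a generic sketch, not Indiveri's circuit; the function name and all parameter values are assumptions.

```python
def simulate_adaptive_lif(n_steps, drive=0.3, threshold=1.0, leak=0.9,
                          adapt_step=0.1, adapt_decay=0.98):
    """Spike times of a leaky neuron with spike frequency adaptation.

    Hypothetical parameters; setting adapt_step=0 turns adaptation off.
    """
    v = 0.0
    adaptation = 0.0
    spike_times = []
    for t in range(n_steps):
        # Constant drive, reduced by the accumulated adaptation.
        v = max(leak * v + drive - adaptation, 0.0)
        adaptation *= adapt_decay      # the brake fades slowly over time
        if v >= threshold:
            spike_times.append(t)
            v = 0.0
            adaptation += adapt_step   # each spike makes the next one harder
    return spike_times


adapting = simulate_adaptive_lif(200)
non_adapting = simulate_adaptive_lif(200, adapt_step=0.0)
intervals = [b - a for a, b in zip(adapting, adapting[1:])]
print(len(adapting), len(non_adapting))
print(intervals)
```

Under the same constant stimulus, the adapting neuron fires less often and the gaps between its spikes grow, so fewer messages are sent. That is consistent with the student's experience that cutting bandwidth and power led back to the same behavior.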

Neuromorphic hardware can also allow researchers to test their theories about brain functioning. Cornell University computational neuroscientist Thomas Cleland builds models of the olfactory bulb to elucidate the principles underpinning our sense of smell. Using the Loihi chip enabled him to build hardware models that were fast enough to mimic biology. When given data from chemosensors—serving as artificial versions of our scent receptors—the system learned to recognize odors after being exposed to just one sample, outperforming traditional machine learning approaches and getting closer to humans’ superior sniffer.

“By successfully mapping something like that and actually showing it working in a neuromorphic chip, it’s a great confirmation that you really do understand the system,” says Davies.

Cleland’s olfaction models didn’t always work out as expected, but those “failed” experiments were just as revealing. The odor inputs sometimes looked different to the sensors than the model predicted, possibly because the odors were more complex or noisier than expected, or because temperature or humidity interfered with the sensors. “The input gets kind of wonky, and we know that that doesn’t fool our nose,” he says. The researchers discovered that by paying attention to previously overlooked “noise” in the odor inputs, the olfactory system model could correctly detect a wider variety of inputs. The results led Cleland to update his model of olfaction, and researchers can now look at biological systems to see if they use this previously unknown technique to recognize complex or noisy odors.

Cleland is hoping to scale up his model, which runs in biological real time, to analyze odor data from hundreds or even thousands of sensors, something that could take days to run on non-neuromorphic hardware. “As long as we can put the algorithms onto the neuromorphic chip, then it scales beautifully,” he says. “The most exciting thing for me is being able to run these 16,000-sensor datasets to see how good the algorithm is going to get when we do scale up.”

SpiNNaker, TrueNorth, and Loihi can all run simulations of neurons and the brain at the same speed at which they occur in biology, meaning researchers can use these chips to recognize stimuli—such as images, gestures, or voices—as they occur, and process and respond to them immediately. Apart from allowing Cleland’s artificial nose to process odors, these capabilities could enable robots to sense and react to their environment in real time while consuming very little power. That’s a huge step up from most conventional computers.

For some applications, such as modeling learning processes that can take weeks, months, or even years, it helps to be a bit faster. That’s where BrainScaleS comes in, with its ability to run about 1,000 to 10,000 times faster than biological brains. And the system is only getting more advanced. It’s in the process of being upgraded to BrainScaleS2, with new processors developed in close collaboration with neuroscientists.
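To see what that speedup means for a slow learning process, the sketch below converts one year of biological time into wall-clock time at the two quoted speedup factors. The names are illustrative, and a 365-day year is assumed.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # assumes a 365-day year


def wall_clock_hours(biological_seconds, speedup):
    """Hours of hardware time needed to emulate the given biological time."""
    return biological_seconds / speedup / 3600


for speedup in (1_000, 10_000):
    hours = wall_clock_hours(SECONDS_PER_YEAR, speedup)
    print(f"{speedup:,}x faster: one biological year in {hours:.2f} hours")
```

At the lower figure a year of simulated learning fits into a working day of under nine hours, and at the higher figure it takes less than an hour.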

The new system will be able to better emulate learning and model chemical processes, such as the effects of dopamine on learning, that are not replicated in other neuromorphic systems. The researchers say it will also be able to model various kinds of neurons, dendrites, and ion channels, and features of structural plasticity such as the loss and growth of synapses. Maybe one day the system will even be able to approximate human learning and intelligence. “To understand biological intelligence is, I think, by far the biggest problem of the century,” says Johannes Schemmel, a biophysicist at Heidelberg University who helped develop BrainScaleS.

See “The Intelligence Puzzle,” The Scientist, November 2018

Current artificial intelligence systems still trail the brain when it comes to flexibility and learning ability. “Google’s networks became very good at recognizing images of cats once they were shown 10 million images of cats, but if you show my two-year-old grandson one cat he will recognize cats for the rest of his life,” says Furber.

With advances to Loihi set to roll out later this year, Eliasmith hopes to be able to add more high-level cognitive and learning behaviors to his Spaun model. He says he’s particularly excited to try to accurately model how humans can quickly and easily learn a cognitive task, such as a new board game. Famed AI gamers such as AlphaGo must model millions of games to learn how to play well.

It’s still unclear whether replicating human intelligence is just a matter of building larger and more detailed models of the brain. “We just don’t know if the way that we’re thinking about the brain is somehow fundamentally flawed,” says Eliasmith. “We won’t know how far we can get until we have better hardware that can run these things in real time with hundreds of millions of neurons,” he says. “That’s what I think neuromorphics will help us achieve.”

Sandeep Ravindran is a freelance science journalist living in New York City.

References

1. C. Eliasmith et al., “A large-scale model of the functioning brain,” Science, 338:1202–05, 2012.

2. D.S. Modha, R. Singh, “Network architecture of the long-distance pathways in the macaque brain,” PNAS, 107:13485–90, 2010.

3. P.A. Merolla et al., “A million spiking-neuron integrated circuit with a scalable communication network and interface,” Science, 345:668–73, 2014.

4. M. Davies et al., “Loihi: A neuromorphic manycore processor with on-chip learning,” IEEE Micro, 38:82–99, 2018.

5. J. Schemmel et al., “A wafer-scale neuromorphic hardware system for large-scale neural modeling,” Proc 2010 IEEE Int Symp Circ Sys, 2010.

6. S.B. Furber et al., “The SpiNNaker Project,” Proc IEEE, 102:652–65, 2014.