It has been almost 9 months since I started my sabbatical work with the Microsoft Azure Cosmos DB team.

I knew what I signed up for then, and I knew it was overwhelming.

It is hard not to get overwhelmed. Cosmos DB provides a global highly-available low-latency all-in-one database/storage/querying/analytics service to heavyweight demanding businesses. Cosmos DB is used ubiquitously within Microsoft systems/services, and is also one of the fastest-growing services used by Azure developers externally. It manages 100s of petabytes of indexed data, and serves 100s of trillions of requests every day from thousands of customers worldwide, and enables customers to build highly-responsive mission-critical applications.

But I underestimated how much there is to learn, and how long it would take to develop a good sense of the big picture. By “developing a good sense of the big picture”, I mean learning/internalizing the territory myself, and, when looking at the terrain, being able to appreciate the excruciatingly tiny and laborious details in each square-inch as well.

Cosmos DB has many big teams working on many different parts/fronts at any point. I am still amazed by the activity going on simultaneously on so many different fronts: testing, security, core development, resource governance, multitenancy, query and indexing, scale-out storage, scale-out computing, Azure integration, serverless functions, telemetry, customer support, devops, etc. Initially all I cared about was the core development front and the concurrency control protocols there. But over time, via diffusion and osmosis, I came to learn, understand, and appreciate the challenges on other fronts as well.

It may sound weird to say this after 9 months, but I am still a beginner. Maybe I have been slow, but when you join a very large project, it is normal that you start working on something small, and on a parallel thread you gradually develop a sense of an emerging big picture through your peripheral vision. This may take many months, and at Microsoft, big teams are aware of this and give you your space as you bloom.

This is a lot like learning to drive. You start in the parking lots, in less crowded suburbs, and then as you get more skilled you go into the highway networks and get a fuller view of the territory. It is nice to explore, but you wouldn’t be able to do it until you develop your skills. Even when someone shows you the map view and tells you that these places exist, you don’t fully realize and appreciate them. They are just concepts to you, and you have a very superficial familiarity with them until you start to explore them yourself.

As a result, things seem to move slowly if you are an engineer working on a small thing, say a datacenter automatic failover component, in one part of the territory. Getting this component right may take months of your time. But it is OK. You are building a new street in one of the suburbs, and this adds to the big picture. The big picture keeps growing at a steady rate, and the colors get more vibrant, even though individually you feel like you are progressing slowly.

“A thousand details add up to one impression.” -Cary Grant

“The implication is that few (if any) details are individually essential, while the details collectively are absolutely essential.” -McPhee

When I started in August, I had the suspicion that I needed to unpack this term: “cloud-native”.

(The term “cloud-native” is a loaded key term, and the team doesn’t use it lightly. I will try to unpack some of it here, and I will revisit this in my later posts.)

Again, I underestimated how long it would take me to get a better appreciation of this. After learning about the many fronts on which things are progressing, I was able to get a better understanding of how important and challenging this is.

So in the rest of the post, I will try to give an overview of things I learned about what a cloud-native distributed database service does. It is possible to write a separate blog post for each paragraph, and I hope to expand on each in the future.

What does a cloud-born distributed database look like?

On the quest to achieve cost-effective, reliable and assured, general-purpose and customizable/flexible operation, a globally distributed cloud database faces many challenges and mutually conflicting goals in different dimensions. But it is important to hit as high a mark in as many of the dimensions as possible.

While several databases hit one or two of these dimensions, hitting all these dimensions together is very challenging. For example, the scale challenge becomes much harder when the database needs to provide guaranteed performance at any point in the scale curve. Providing virtually unlimited storage becomes more challenging when the database also needs to meet stringent performance SLAs at any point. Finally, achieving these while providing tenant-isolation and managing resources to prevent any tenant from impacting the performance of others is extra challenging, but is required for providing a cost-efficient cloud database.

Trying to add support and realize substantial improvements for all of these dimensions does not work as an afterthought. Cosmos DB is able to hit a high mark in all these dimensions because it is designed from the ground up to meet all these challenges together. Cosmos DB provides a frictionless cloud-native distributed database service via:

- Global distribution (with guaranteed consistency and latency) by virtue of transparent multi-master replication,

- Elastic scalability of throughput and storage worldwide (with guaranteed SLAs) by virtue of horizontal partitioning, and

- Fine-grained multi-tenancy by virtue of a highly resource-governed system stack all the way from the database engine to the replication protocol.

To realize these, Cosmos DB uses a novel nested distributed replication protocol, robust scalability techniques, and well-designed resource governance abstractions, and I will try to introduce these next.

Scalability, Global Distribution, and Resource Governance

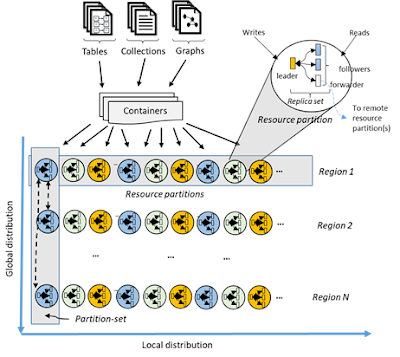

To achieve high scalability and availability, Cosmos DB uses a novel protocol to replicate data across nodes and datacenters with minimal latency overheads and maximum throughput. For scalability, data is automatically sharded, guided by storage and throughput triggers, and the shards are assigned to different partitions. Each partition is served by a multi-node replica-set in each region, with one node acting as the primary replica to handle all write operations and replication within the replica-set. Clients read the data from a quorum of secondary replicas. (Soft-state scale-out storage and computation implementations provide independent scaling for storage-heavy and computation-heavy workloads.)
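To make the idea of storage and throughput triggers concrete, here is a minimal sketch. The thresholds, the `Shard` type, and the even-split rule are all assumptions of mine for illustration; they are not the actual Cosmos DB triggers or split policy.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Shard:
    name: str
    storage_gb: float
    consumed_rus_per_sec: float


# Hypothetical trigger thresholds, chosen only for this example.
MAX_STORAGE_GB = 10.0
MAX_RUS_PER_SEC = 10_000.0


def needs_split(shard: Shard) -> bool:
    """A shard is split when either its storage or its throughput trigger fires."""
    return (shard.storage_gb > MAX_STORAGE_GB
            or shard.consumed_rus_per_sec > MAX_RUS_PER_SEC)


def split(shard: Shard) -> List[Shard]:
    """Divide the shard into two children (assumes load splits evenly)."""
    return [
        Shard(f"{shard.name}-{suffix}", shard.storage_gb / 2, shard.consumed_rus_per_sec / 2)
        for suffix in ("a", "b")
    ]


def rebalance(shards: List[Shard]) -> List[Shard]:
    """Keep splitting until no shard trips a trigger."""
    settled: List[Shard] = []
    pending = list(shards)
    while pending:
        shard = pending.pop()
        if needs_split(shard):
            pending.extend(split(shard))
        else:
            settled.append(shard)
    return settled


for shard in rebalance([Shard("s0", storage_gb=25.0, consumed_rus_per_sec=4_000.0)]):
    print(shard)
```

In this toy run the 25 GB shard trips the storage trigger twice, so it ends up as four shards of 6.25 GB each.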

Since the replica-set maintains multiple copies of the data, it can mask some replica failures in a region without sacrificing availability, even in the strong consistency mode. (While two simultaneous replica failures may be rare, it is more common to observe one replica failure while another replica is unavailable due to rolling upgrades, and masking two replica failures helps provide smooth, uninterrupted operation for the cloud database.) Each region contains an independent configuration manager to maintain system configuration and perform leader election for the partitions. Based on the membership changes, the replication protocol also reconfigures the size of read and write quorums.
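As a rough illustration of how quorum sizes could follow membership, here is a minimal sketch. It assumes a simple majority write quorum and the smallest read quorum that still overlaps every write quorum. The function name `quorum_sizes` and this particular rule are my assumptions, not a description of the actual Cosmos DB protocol.

```python
from typing import Tuple


def quorum_sizes(replica_count: int) -> Tuple[int, int]:
    """Return (write_quorum, read_quorum) for a replica-set of the given size.

    Assumption: the write quorum is a simple majority, and the read quorum is
    the smallest size that intersects every write quorum (R + W > N).
    """
    if replica_count < 1:
        raise ValueError("replica-set must have at least one member")
    write_quorum = replica_count // 2 + 1
    read_quorum = replica_count - write_quorum + 1
    return write_quorum, read_quorum


# Hypothetical membership change: a 4-replica set loses one member.
for n in (4, 3):
    w, r = quorum_sizes(n)
    print(f"replicas={n} write_quorum={w} read_quorum={r}")
```

Under these assumptions, shrinking from four replicas to three lowers the write quorum from 3 to 2 while the read quorum stays at 2, and any read quorum still overlaps any write quorum.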

In addition to the local replication inside a replica-set, there is also geo-replication, which implements distribution across any number of Azure regions (50+ of them). Geo-replication is achieved by a nested consensus distribution protocol across the replica-sets in different regions. Cosmos DB provides multi-master active-active replication in order to allow reads and writes from any region. For most consistency models, when a write originates in some region, it becomes immediately available in that region while being sent to an arbiter (ARB) for ordering and conflict resolution. The ARB is a virtual process that can co-locate in any of the regions. It uses the distribution protocol to copy the data to primaries in each region, which then replicate the data in their respective regions. The Cosmos DB distribution and replication protocols are verified at the design level with the TLA+ model checker, and the implementations are further tested for consistency problems using (an MS Windows port of) Jepsen tests.
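The ordering and conflict resolution role of the arbiter can be pictured with a toy example. The sketch below assumes a last-writer-wins policy on a logical timestamp, with the region name as a tie-breaker. The `Write` and `Arbiter` types, the policy, and the region labels are my own illustrative choices; the post does not say how the real ARB resolves conflicts.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Write:
    key: str
    value: str
    region: str
    timestamp: int  # hypothetical logical timestamp assigned by the origin region


@dataclass
class Arbiter:
    """Toy arbiter: puts writes in one global order and picks a winner per key."""
    log: List[Tuple[int, Write]] = field(default_factory=list)
    winners: Dict[str, Write] = field(default_factory=dict)

    def submit(self, write: Write) -> bool:
        """Append the write to the global order; return True if it wins its key."""
        self.log.append((len(self.log), write))
        current = self.winners.get(write.key)
        # Assumed policy: higher timestamp wins; region name breaks ties deterministically.
        if current is None or (write.timestamp, write.region) > (current.timestamp, current.region):
            self.winners[write.key] = write
            return True
        return False


arb = Arbiter()
arb.submit(Write("cart:42", "book", "westus", timestamp=10))
arb.submit(Write("cart:42", "pen", "northeurope", timestamp=12))
arb.submit(Write("cart:42", "lamp", "eastasia", timestamp=11))
print(arb.winners["cart:42"].value)  # the write with the highest timestamp
```

Every region that replays the arbiter's log in sequence order and applies the same rule would converge on the same winner for each key.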

Cosmos DB is engineered from the ground up with resource governance mechanisms in order to provide an isolated provisioned throughput experience (backed by SLAs), while achieving high-density packing (where 100s of tenants share the same machine and 1000s share the same cluster). To this end, Cosmos DB defines an abstract rate-based currency for throughput, called Request Unit or RU (plural, RUs), that provides a normalized model for accounting for the resources consumed by a request, and charges customers for throughput across various database operations consistently and in a hardware-agnostic manner. Each RU combines a small share of CPU, memory and storage IOPS. Tenants in Cosmos DB control the performance they need from their containers by specifying the maximum throughput in RUs for a given container. Viewed through the lens of resource governance, Cosmos DB is a massively distributed queuing system with cascaded stages of components, each carefully calibrated to deliver predictable throughput while operating within the allotted budget of system resources, guided by the principles of Little’s Law and the Universal Scalability Law. The implementation of resource governance leverages backend partition-level capacity management and across-machine load balancing.
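One way to picture a rate-based throughput budget is a token bucket. The sketch below is my own simplification: the `RuBudget` class, the one-second burst cap, and the request costs are assumptions for illustration, not the actual Cosmos DB governance mechanism.

```python
class RuBudget:
    """Toy per-container throughput budget, refilled at a provisioned RU/s rate."""

    def __init__(self, provisioned_rus_per_sec: float) -> None:
        self.rate = provisioned_rus_per_sec
        self.available = provisioned_rus_per_sec  # start with one second of budget
        self.last_refill = 0.0

    def try_consume(self, request_cost_rus: float, now: float) -> bool:
        """Charge a request against the budget; return False if it must be throttled."""
        elapsed = now - self.last_refill
        # Refill in proportion to elapsed time, capped at one second of budget.
        self.available = min(self.rate, self.available + elapsed * self.rate)
        self.last_refill = now
        if request_cost_rus <= self.available:
            self.available -= request_cost_rus
            return True
        return False


budget = RuBudget(provisioned_rus_per_sec=400)
print(budget.try_consume(300, now=0.0))  # within budget
print(budget.try_consume(300, now=0.1))  # only about 140 RUs available, throttled
print(budget.try_consume(300, now=1.0))  # budget refilled, accepted
```

The clock is passed in explicitly so the example is deterministic. As a side note on the queuing view, Little’s Law says the average number of requests in flight equals the arrival rate times the average latency, so a stage serving 10,000 requests per second at 10 ms average latency holds about 100 requests at a time.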

Contributions of Cosmos DB

By leveraging the cloud-native datastore foundations above, Cosmos DB provides an almost “one size fits all” database. Cosmos DB provides local access to data with low latency and high throughput, offers tunable consistency guarantees within and across datacenters, and provides a wide variety of data models and APIs leveraging powerful indexing and querying support. Since Cosmos DB supports a spectrum of consistency guarantees and works with a diverse set of backends, it resolves the integration problems companies face when multiple teams use different databases to meet different use-cases.

The vast majority of other data-stores provide either eventual consistency, strong consistency, or both. In contrast, the spectrum of consistency guarantees Cosmos DB provides meets the consistency and availability needs of all enterprise applications, web applications, and mobile apps. Cosmos DB enables applications to decide what is best for them and to make different consistency-availability tradeoffs. An eventually-consistent store allows diverging state for a period of time in exchange for more availability and lower latency, and may be suitable for relaxed-consistency applications, such as recommendation systems or search engines. On the other hand, a shopping cart application may require a “read your own writes” property for correct operation. This is served by the session consistency guarantee at Cosmos DB. Extending this across many clients provides “read latest writes” semantics and requires strong consistency. This ensures coordination among many clients of the same application and makes the job of the application developer easy. Strong consistency is preserved both within a single region and across all associated regions. The bounded staleness consistency model guarantees that any read request returns a value within the most recent k versions or t time. It offers global total order except within the staleness window; therefore it is a slightly weaker guarantee than strong consistency.
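The “read your own writes” property can be sketched with a session token. In this toy model, the client remembers the log sequence number (LSN) of its latest write and refuses to read from any replica that has not applied it yet. The `Replica` and `Session` classes and the retry-by-exception behavior are assumptions for illustration, not the Cosmos DB client API.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Replica:
    """Toy replica that applies writes in log-sequence-number (LSN) order."""
    applied_lsn: int = 0
    data: Dict[str, str] = field(default_factory=dict)

    def apply(self, lsn: int, key: str, value: str) -> None:
        self.data[key] = value
        self.applied_lsn = lsn


@dataclass
class Session:
    """Client session that remembers the LSN of its latest write."""
    token: int = 0

    def record_write(self, lsn: int) -> None:
        self.token = max(self.token, lsn)

    def read(self, replica: Replica, key: str) -> Optional[str]:
        # Refuse replicas that have not yet caught up to this session's writes.
        if replica.applied_lsn < self.token:
            raise RuntimeError("replica is behind this session; retry elsewhere")
        return replica.data.get(key)


fresh, lagging = Replica(), Replica()
session = Session()

fresh.apply(lsn=1, key="cart", value="book")  # the write has reached only one replica
session.record_write(1)

print(session.read(fresh, "cart"))  # the session sees its own write
try:
    session.read(lagging, "cart")
except RuntimeError as err:
    print("rejected:", err)
```

A different session with no writes of its own would be free to read from the lagging replica, which is why this guarantee is cheaper than strong consistency.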

Another way Cosmos DB manages to become an all-in-one database is by providing multiple APIs and serving different data models. Web and mobile applications need a spectrum of choices/alternatives for their data models: some applications need SQL, while others take advantage of simpler models, such as key-value or column-family, and yet other applications require more specialized data models, such as document and graph stores. Cosmos DB is designed to support these APIs and data models by projecting the internal document store into different representations depending on the selected model. Clients can pick between document, SQL, Azure Table, Cassandra, and Gremlin graph APIs to interact with their datastore. This not only provides great flexibility for our clients, but also allows them to effortlessly migrate their applications over to Cosmos DB.

Finally, Cosmos DB provides the tightest SLAs in the industry. In contrast to many other databases that provide only availability SLAs, Cosmos DB also provides consistency SLAs, latency SLAs, and throughput SLAs. Cosmos DB guarantees that read operations take under 10ms at the 99th percentile for a typical 1KB object. Write operations are slightly heavier and, for most consistency levels, complete within 15ms. The SLAs for throughput guarantee that clients receive the throughput equivalent to the resources provisioned to the account via RUs.
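To show what a 99th-percentile latency bound means in practice, here is a small sketch that checks a batch of measured read latencies against the 10ms figure. The nearest-rank percentile method, the function names, and the sample data are my assumptions; this is not how the SLA is actually measured or enforced.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_read_sla(latencies_ms: Sequence[float], bound_ms: float = 10.0) -> bool:
    """True if the 99th-percentile latency is strictly under the bound."""
    return percentile(latencies_ms, 99) < bound_ms


# Hypothetical measurements: one slow outlier in 100 reads does not move the p99.
one_outlier = [4.0] * 99 + [50.0]
two_outliers = [4.0] * 98 + [50.0, 50.0]
print(meets_read_sla(one_outlier))
print(meets_read_sla(two_outliers))
```

The point of a percentile bound is visible here: one slow read out of 100 is tolerated, while two push the 99th percentile over the limit.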