Amazon Aurora: on avoiding distributed consensus for I/Os, commits, and membership changes, Verbitski et al., SIGMOD’18

This is a follow-up to the paper we looked at earlier this week on the design of Amazon Aurora. I’m going to assume a level of background knowledge from that work and skip over the parts of this paper that recap those key points. What is new and interesting here are the details of how quorum membership changes are dealt with, the notion of heterogeneous quorum set members, and more detail on the use of consistency points and the redo log.

Changing quorum membership

Managing quorum failures is complex. Traditional mechanisms cause I/O stalls while membership is being changed.

As you may recall though, Aurora is designed for a world with a constant background level of failure. So once a quorum member is suspected faulty we don’t want to have to wait to see if it comes back, but nor do we want to throw away the benefits of all the state already present on a node that might in fact come back quite quickly. Aurora’s membership change protocol is designed to support continued processing during the change, to tolerate additional failures while changing membership, and to allow member re-introduction if a suspected faulty member recovers.

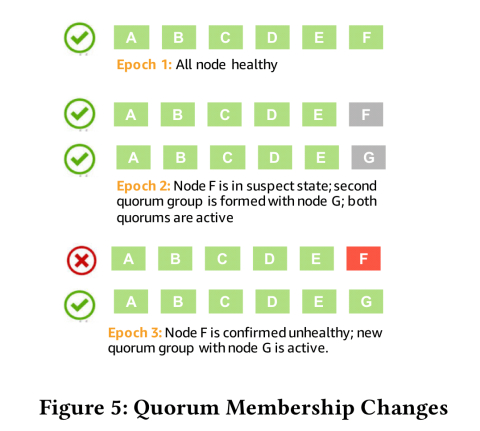

Each membership change is made via at least two transitions. Say we start out with a protection group with six segment members, A-F. A write quorum is any 4 of 6, and a read quorum is any 3 of 6. We miss some heartbeats for F and suspect it of being faulty.

Move one is to increment the membership epoch and introduce a new node G. All read and write requests, and any gossip messages, carry the epoch number. Any request with a stale epoch number will be rejected. Making the epoch change requires a write quorum, just like any other write. The new membership epoch established through this process now requires a write set to be any four of ABCDEF and any four of ABCDEG. Notice that whether we ultimately choose to reinstate F, or we stick with G, we have valid quorums at all points under both paths. For a read set we need any 3 of ABCDEF or any 3 of ABCDEG.
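To make the epoch rule concrete, here is a minimal sketch of a segment that rejects requests stamped with a stale epoch. The `Segment` class, its method names, and `StaleEpochError` are hypothetical names invented for illustration; the paper describes the behaviour, not this interface.

```python
from dataclasses import dataclass


class StaleEpochError(Exception):
    """Raised when a request carries an out-of-date membership epoch."""


@dataclass
class Segment:
    """Hypothetical storage segment that tracks the current membership epoch."""
    name: str
    epoch: int = 1

    def advance_epoch(self, new_epoch: int) -> None:
        # Epochs only move forward; an older value is ignored.
        self.epoch = max(self.epoch, new_epoch)

    def handle(self, request_epoch: int, payload: str) -> str:
        # Reads, writes, and gossip would all pass through a check like this.
        if request_epoch < self.epoch:
            raise StaleEpochError(
                f"{self.name}: request epoch {request_epoch} < current {self.epoch}"
            )
        return f"{self.name} accepted {payload}"
```

A caller that gets `StaleEpochError` would presumably refresh its view of the membership and retry with the current epoch.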

You can probably see where this is going. If F comes back before G has finished hydrating from its peers, then we make a second membership transition back to the ABCDEF formation. If it doesn’t, we can make a transition to the ABCDEG formation.

Additional failures are handled in a similar manner. Suppose we’re in the transition state with a write quorum of (4/6 of ABCDEF) AND (4/6 of ABCDEG) and wouldn’t you know it, now there’s a problem with E! Meet H. We can transition to a write quorum that is (4/6 of ABCDEF and 4/6 of ABCDEG) AND (4/6 of ABCDHF and 4/6 of ABCDHG). Note that even here, simply writing to ABCD fulfils all four conditions.
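The transitional quorum rules are easy to check mechanically. The sketch below treats each candidate membership as a string of single-letter segment names, which is purely an illustrative encoding: a write must reach 4 members of every candidate set, and a read must reach 3 members of at least one. The function names are made up for this example.

```python
def is_write_quorum(acks, memberships, needed=4):
    """True if the acks include at least `needed` members of EVERY candidate set."""
    acks = set(acks)
    return all(len(acks & set(members)) >= needed for members in memberships)


def is_read_quorum(replies, memberships, needed=3):
    """True if the replies include at least `needed` members of ANY candidate set."""
    replies = set(replies)
    return any(len(replies & set(members)) >= needed for members in memberships)


# F is suspect, G is being hydrated.
single_transition = ["ABCDEF", "ABCDEG"]
# E then also becomes suspect, and H is introduced.
double_transition = ["ABCDEF", "ABCDEG", "ABCDHF", "ABCDHG"]

print(is_write_quorum("ABCD", double_transition))  # True: ABCD is in all four sets
print(is_write_quorum("ABCE", double_transition))  # False: only 3 of ABCDHF
print(is_write_quorum("ABFG", single_transition))  # False: only 3 of each set
```

Writing the write rule as an AND over candidate sets and the read rule as an OR is what keeps every read quorum overlapping every write quorum, whichever membership is finally chosen.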

Heterogeneous quorums

Quorums are generally thought of as a collection of like members, grouped together to transparently handle failures. However, there is nothing in the quorum model to prevent unlike members with differing latency, cost, or durability characteristics.

Aurora exploits this to set up protection groups with three full segments, which store both redo log records and materialized data blocks, and three tail segments which store just the redo logs. Since data blocks typically take more space than redo logs the costs stay closer to traditional 3x replication than 6x.

With the split into full and tail segments a write quorum becomes any 4/6 segments, or all 3 full segments. (“In practice, we write log records to the same 4/6 quorum as we did previously. At least one of these log records arrives at a full segment and generates a data block“). A read quorum becomes 3/6 segments, to include at least one full segment. In practice though, data is read directly from a full segment avoiding the need for a quorum read – an optimisation we’ll look at next.
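With only six segments it is cheap to confirm by brute force that these mixed rules still guarantee overlap. The following sketch enumerates every subset of a protection group with three full and three tail segments; the segment labels (`F1`..`F3`, `T1`..`T3`) and function names are invented for the example.

```python
from itertools import combinations

FULL = frozenset({"F1", "F2", "F3"})  # store redo log records and data blocks
TAIL = frozenset({"T1", "T2", "T3"})  # store redo log records only
ALL = FULL | TAIL


def hetero_write_quorum(segments):
    """Any 4 of the 6 segments, or all 3 full segments."""
    return len(segments) >= 4 or FULL <= segments


def hetero_read_quorum(segments):
    """Any 3 of the 6 segments, including at least one full segment."""
    return len(segments) >= 3 and bool(segments & FULL)


def subsets(members):
    """Yield every subset of `members` as a frozenset."""
    items = sorted(members)
    for size in range(len(items) + 1):
        for combo in combinations(items, size):
            yield frozenset(combo)


def every_read_overlaps_every_write():
    writes = [s for s in subsets(ALL) if hetero_write_quorum(s)]
    reads = [s for s in subsets(ALL) if hetero_read_quorum(s)]
    # An empty intersection is falsy, so all() fails if any pair misses.
    return all(r & w for r in reads for w in writes)


print(every_read_overlaps_every_write())  # True
```

The "at least one full segment" clause on reads is what makes the "all 3 full segments" write quorum safe: without it, a read of the three tail segments would miss such a write entirely.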

There are many options available once one moves to quorum sets of unlike members. One can combine local disks to reduce latency and remote disks for durability and availability. One can combine SSDs for performance and HDDs for cost. One can span quorums across regions to improve disaster recovery. There are numerous moving parts that one needs to get right, but the payoffs can be significant. For Aurora, the quorum set model described earlier lets us achieve storage prices comparable to low-cost alternatives, while providing high durability, availability, and performance.

Efficient reads

So we spent the previous paper and much of this one nodding along with read quorums that must overlap with write quorums, understanding the 4/6 and 3/6 requirements and so on, only to uncover the bombshell that Aurora doesn’t actually use read quorums in practice at all! What!? (Ok, it does use read quorums, but only during recovery).

The thing is there can be a lot of reads, and there’s an I/O amplification effect as a function of the size of the read quorum. Whereas with write amplification we’re sending compact redo log records, with reading we’re looking at full data blocks too. So Aurora avoids quorum reads.

Aurora does not do quorum reads. Through its bookkeeping of writes and consistency points, the database instance knows which segments have the last durable version of a data block and can request it directly from any of those segments… The database will usually issue a request to the segment with the lowest measured latency, but occasionally also query one of the others in parallel to ensure up to date read latency response times.

If a read is taking a long time, Aurora will issue a read to another storage node and go with whichever node returns first.
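A rough sketch of the target selection might look like the following. It assumes, as a simplification, that "has the last durable version" can be modelled as the segment being complete up to the LSN the read needs; the function name, its parameters, and the two dictionaries are hypothetical bookkeeping structures, not Aurora's actual ones.

```python
def choose_read_targets(complete_up_to, latency_ms, read_lsn, hedge=False):
    """Pick which segments to read from, fastest first.

    complete_up_to: segment name -> highest LSN the segment is known to hold
    latency_ms:     segment name -> most recently measured read latency
    read_lsn:       LSN the read must be able to see
    hedge:          also query the runner-up in parallel
    """
    eligible = [s for s, lsn in complete_up_to.items() if lsn >= read_lsn]
    ranked = sorted(eligible, key=lambda s: latency_ms[s])
    return ranked[:2] if hedge else ranked[:1]


complete_up_to = {"A": 110, "B": 95, "C": 110}
latency_ms = {"A": 2.0, "B": 0.5, "C": 1.2}

print(choose_read_targets(complete_up_to, latency_ms, read_lsn=100))
# ['C']  (B is fastest but not caught up)
print(choose_read_targets(complete_up_to, latency_ms, read_lsn=100, hedge=True))
# ['C', 'A']
```

The hedged variant serves both purposes mentioned above: it bounds tail latency when the first choice is slow, and it refreshes the latency measurements for segments that are not normally chosen.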

The bookkeeping that supports this is based on read views that maintain snapshot isolation using Multi-Version Concurrency Control (MVCC). When a transaction commits, the log sequence number (LSN) of its commit redo record is called the System Commit Number or SCN. When a read view is established we remember the SCN of the most recent commit, and the list of transactions active as of that LSN.

Data blocks seen by a read request must be at or after the read view LSN and back out any transactions either active as of that LSN or started after that LSN… Snapshot isolation is straightforward in a single-node database instance by having a transaction read the last durable version of a database block and apply undo to roll back any changes.
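One way to picture the read view rule is as a visibility predicate over individual changes. This is a deliberately simplified sketch: `ReadView`, `Change`, and `is_visible` are illustrative names, and a real engine applies undo records to a block rather than filtering a list of changes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReadView:
    scn: int                 # LSN of the most recent commit when the view was taken
    active_txns: frozenset   # transactions still in flight at that point


@dataclass(frozen=True)
class Change:
    txn_id: str
    lsn: int


def is_visible(change: Change, view: ReadView) -> bool:
    """A change is kept only if it predates the view and its transaction had finished.

    Changes from transactions that started after the view all have later LSNs,
    so the LSN comparison backs those out; the active list backs out the rest.
    """
    return change.lsn <= view.scn and change.txn_id not in view.active_txns


view = ReadView(scn=100, active_txns=frozenset({"t7"}))
print(is_visible(Change("t3", 90), view))   # True: committed before the view
print(is_visible(Change("t7", 95), view))   # False: t7 was still active
print(is_visible(Change("t9", 120), view))  # False: happened after the view
```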

Storage consistency points

Aurora is able to avoid much of the work of consensus by recognizing that, during normal forward processing of a system, there are local oases of consistency. Using backward chaining of redo records, a storage node can tell if it is missing data and gossip with its peers to fill in gaps. Using the advancement of segment chains, a database instance can determine whether it can advance durable points and reply to clients requesting commits. Coordination and consensus is rarely required….

Recall that the only writes which cross the network from the database instance to the storage node are log redo records. Redo log application code is run within the storage nodes to materialize blocks in the background or on-demand to satisfy read requests.

Log records form logical chains. Each log record stores the LSN of the previous log record in the volume, the previous LSN for the segment, and the previous LSN for the block being modified.

- The block chain is used to materialise individual blocks on demand

- The segment chain is used by each storage node to identify records it has not received and fill those holes via gossip

- The full log chain provides a fallback path to regenerate storage volume metadata in case of a “disastrous loss of metadata state.”
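The segment chain in particular lends itself to a small sketch. Below, each record carries the three back-pointers described above, and a storage node walks its segment chain forward to find how far it is gap-free. The field names, the use of `None` for "no predecessor", and both helper functions are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LogRecord:
    lsn: int
    prev_volume_lsn: Optional[int]   # previous record anywhere in the volume
    prev_segment_lsn: Optional[int]  # previous record for this segment
    prev_block_lsn: Optional[int]    # previous record for the same block


def gap_free_up_to(received):
    """Highest LSN reachable from the start of the segment chain without a gap."""
    by_prev = {r.prev_segment_lsn: r for r in received}
    complete = None
    current = by_prev.get(None)  # assumed: the first record has no predecessor
    while current is not None:
        complete = current.lsn
        current = by_prev.get(current.lsn)
    return complete


def missing_predecessors(received):
    """LSNs that some received record points back to but that never arrived."""
    have = {r.lsn for r in received}
    wanted = {r.prev_segment_lsn for r in received if r.prev_segment_lsn is not None}
    return wanted - have


records = [
    LogRecord(101, 100, None, None),
    LogRecord(103, 102, 101, 101),
    LogRecord(107, 106, 105, 103),  # points back to 105, which has not arrived
]
print(gap_free_up_to(records))        # 103
print(missing_predecessors(records))  # {105}
```

The set returned by `missing_predecessors` is what a node would ask its peers for via gossip; once 105 arrives, the gap-free point jumps to 107.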

…all log writes, including those for commit redo log records, are sent asynchronously to storage nodes, processed asynchronously at the storage node, and asynchronously acknowledged back to the database instance.

Each storage node advances a Segment Complete LSN (SCL), representing the latest point up to which it has received all log records. The SCL is sent as part of a write acknowledgement. Once the database instance has seen the SCL advance at 4/6 members of a protection group, it advances the Protection Group Complete LSN (PGCL) – the point at which the protection group has made all writes durable. In the following figure for example, the PGCL for PG1 is 103 (because 105 has not met quorum), and the PGCL for PG2 is 104.
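Given the latest SCL reported by each member, the PGCL is just the highest LSN that at least four members have reached, which is the fourth-largest SCL. A minimal sketch, with a hypothetical function name and made-up SCL values (they are not taken from the paper's figure):

```python
def protection_group_complete_lsn(scls, write_quorum=4):
    """Highest LSN that at least `write_quorum` members report as complete."""
    if len(scls) < write_quorum:
        raise ValueError("not enough members reporting to form a write quorum")
    # After sorting in descending order, the value at index write_quorum - 1
    # is reached or exceeded by exactly the first `write_quorum` entries.
    return sorted(scls, reverse=True)[write_quorum - 1]


# Three members have reached 105, three are still at 103: 105 lacks a quorum.
print(protection_group_complete_lsn([105, 105, 105, 103, 103, 103]))  # 103
# Four members have reached 104.
print(protection_group_complete_lsn([104, 104, 104, 104, 102, 101]))  # 104
```

This is purely local arithmetic on acknowledgements the database instance already holds, which is why no round of voting is needed.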

The database advances a Volume Complete LSN (VCL) once there are no pending writes preventing PGCL advancing for one of its protection groups. No consensus is required to advance SCL, PGCL, or VCL; this can all be done via local bookkeeping.

This is possible because storage nodes do not have a vote in determining whether to accept a write; they must do so. Locking, transaction management, deadlocks, constraints, and other conditions that influence whether an operation may proceed are all resolved at the database tier.

Commits are acknowledged by the database once the VCL has advanced beyond the System Commit Number of the transaction’s commit redo log record.
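The commit path then reduces to parking each commit until the VCL catches up. The sketch below is one possible shape for that bookkeeping; `CommitQueue` and its methods are hypothetical, and it assumes a commit can be acknowledged as soon as the VCL reaches the commit record's SCN (that is, VCL >= SCN).

```python
import heapq


class CommitQueue:
    """Holds commits until the Volume Complete LSN covers their commit record."""

    def __init__(self):
        self._waiting = []  # min-heap of (scn, txn_id)

    def request_commit(self, txn_id, scn):
        # The worker thread does not block here; it can move on to other work.
        heapq.heappush(self._waiting, (scn, txn_id))

    def on_vcl_advance(self, vcl):
        """Return the transactions that can now be acknowledged, oldest first."""
        acked = []
        while self._waiting and self._waiting[0][0] <= vcl:
            _, txn_id = heapq.heappop(self._waiting)
            acked.append(txn_id)
        return acked


queue = CommitQueue()
queue.request_commit("t1", scn=100)
queue.request_commit("t2", scn=105)
queue.request_commit("t3", scn=103)

print(queue.on_vcl_advance(103))  # ['t1', 't3']
print(queue.on_vcl_advance(104))  # []
print(queue.on_vcl_advance(110))  # ['t2']
```

Keeping the waiting commits ordered by SCN means each VCL advance only inspects the commits it actually releases.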