The microservice architecture paradigm fits well with the first principle of the Unix philosophy:

“Make each program do one thing well.”

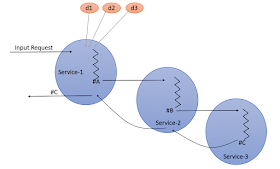

We love creating small services, each with an identity of its own (within a bounded context). These services have their own CI/CD pipelines and provide loose coupling between components, which significantly improves our ability to deliver code to production. In reality, a workflow can span multiple services. It’s very common to find scenarios like below:

In the above example, a workflow spans 3 services (Service-1, 2 and 3). Service-1 receives the original request. In order to process this request, it GETs some data from its dependent services (d1/d2/d3). It then runs some business logic and produces an intermediate response #A. Next, it makes a state-mutating call (POST/PUT/DELETE/PATCH) to Service-2 using #A as input and waits for its response. Service-2 follows the same paradigm, and the workflow ends when all services return.

The scenario above is probably the most common one you will find in a microservice architecture. From a high level, there is nothing wrong with this approach. OK, let’s talk about testing. Let’s say my team owns Service-1 and I want to write Component Tests that test Service-1 in isolation. Confused about why I am talking about Component Tests and not Unit Tests? If you have been following my blog, you probably already know that Component Tests have replaced Unit Tests for all my microservice stacks. More details here if interested.

All the testing tools and methodologies known to us follow the AAA paradigm: Arrange, Act and Assert. Arrange is the mocking, Act is the input, and Assert covers the assertions we want to make on the output. If a program fits this paradigm, we know how to test it. For Service-1, what is the most important thing to test? Is it the output #C? NO! #C is actually a response from a downstream service. To test Service-1, we should be testing #A. Service-1’s most important responsibility is to produce the correct #A for a given input and set of mocked dependencies. But how can I test #A if it is not the output of Service-1? Going back to the AAA paradigm: I know how to test the output of a program, but in this case #A is not the output. It is an intermediate state, and it probably has no correlation with the actual output #C. If I am asserting on #C, then I am not really testing Service-1.
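To make the problem concrete, here is a minimal sketch in Python. Every name in it (`service_1_handle`, `fetch_dependencies`, `post_to_service_2`, the price-times-quantity logic) is a hypothetical stand-in for real HTTP calls and real business rules. It only illustrates the shape of the test, not how any particular service is built.

```python
from unittest.mock import Mock


def service_1_handle(request, fetch_dependencies, post_to_service_2):
    """Hypothetical Service-1 handler that also orchestrates the call to Service-2."""
    data = fetch_dependencies(request["item_id"])        # GET from d1/d2/d3
    a = {                                                # intermediate result #A
        "item_id": request["item_id"],
        "total": data["price"] * request["quantity"],
    }
    c = post_to_service_2(a)                             # mutating call downstream
    return c                                             # the caller only ever sees #C


# Arrange: mock the dependencies and the downstream service.
fetch_dependencies = Mock(return_value={"price": 5})
post_to_service_2 = Mock(return_value={"status": "accepted"})

# Act: send the input request.
output = service_1_handle(
    {"item_id": "x1", "quantity": 3}, fetch_dependencies, post_to_service_2
)

# Assert: the output is just whatever the Service-2 mock returned,
# so this assertion says nothing about Service-1's business logic.
assert output == {"status": "accepted"}

# The only way to reach #A is to inspect what was handed to the mock.
a_seen_by_service_2 = post_to_service_2.call_args.args[0]
assert a_seen_by_service_2 == {"item_id": "x1", "total": 15}
```

The last two lines work, but they assert on an interaction with a mock rather than on an output, which is exactly the awkwardness described above.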

Let’s see if the second principle of the Unix philosophy can help us here:

“Expect the output of every program to become the input to another, as yet unknown, program.”

Let’s see this in action. Imagine I have thousands of directories and I want to find the ones with “gear” in their name. I can write it like this:

ls -a | grep "gear"

I am composing a workflow from two programs: ls lists the directory entries, and its output is fed into grep to find the “gear” directories that I am interested in. Does it sound similar to the workflow we created with microservices above (composing a workflow from small services that are each supposed to do one thing well)? Let’s take a closer look. In this workflow, whose responsibility is it to pass the result of ls into grep? Is it ls’s responsibility? NO! ls’s responsibility is to produce the list of names as output, which is the most important thing it does. So who is passing data from ls to grep? It’s the pipe! To put things in context, imagine team-A owns the ls program and team-B owns grep. The only way these teams can test their programs independently is if each program returns the most important thing it does as OUTPUT. Then we know how to apply the AAA paradigm to test it!
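The same division of responsibility can be sketched in Python. The functions `ls`, `grep` and `pipe` below are illustrative stand-ins written for this example, not wrappers around the real programs: each "program" returns its most important result as output, and a separate `pipe` does the wiring.

```python
from functools import reduce


def ls(entries):
    """Stand-in for `ls`: returns the names it was asked to list, sorted."""
    return sorted(entries)


def grep(pattern):
    """Stand-in for `grep`: builds a filter that keeps lines containing `pattern`."""
    return lambda lines: [line for line in lines if pattern in line]


def pipe(*stages):
    """Stand-in for the shell pipe: feeds each stage's output into the next stage."""
    return lambda value: reduce(lambda acc, stage: stage(acc), stages, value)


# Team A can test `ls` alone and team B can test `grep` alone,
# because each one returns its result instead of calling the other.
assert ls(["tools", "gearbox", "docs"]) == ["docs", "gearbox", "tools"]
assert grep("gear")(["docs", "gearbox", "tools"]) == ["gearbox"]

# Only the pipe knows that the two are connected.
find_gear = pipe(ls, grep("gear"))
result = find_gear(["tools", "gearbox", "docs", "old-gear"])
```

Neither `ls` nor `grep` mentions the other, so swapping either stage for a different program only changes the `pipe(...)` line.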

Now let’s compare this with the microservice workflow example above. When we make Service-1 orchestrate part of the workflow, namely the mutating call it makes to Service-2, we make Service-1 less testable. We lose #A because it is not the output, so we cannot test this service in isolation and will probably need to rely on integration tests.

Separate functional and orchestration responsibilities for better testability:

First, let me clarify what I mean by functional services. In this context, a functional service is one that takes an input request and may talk to its dependency services, but only to GET the data it needs to process that request. It then runs its business logic and produces a result. It may use its own data store to get/put the result, and finally it returns the result to the caller. The one thing it should not do (if you haven’t noticed already) is try to orchestrate parts of the workflow. If we want a testable service, it has to return the most important thing it does as its output.
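As a rough illustration, a functional version of Service-1 could look like the Python sketch below. The handler name, the `fetch_dependencies` parameter and the price-times-quantity logic are all made up for this example. The point is only that #A is now the return value, so a plain Arrange/Act/Assert test can reach it.

```python
def service_1_handle(request, fetch_dependencies):
    """Hypothetical functional Service-1: GETs data, runs logic, returns #A."""
    data = fetch_dependencies(request["item_id"])    # read-only GET from d1/d2/d3
    return {                                         # #A is the output
        "item_id": request["item_id"],
        "total": data["price"] * request["quantity"],
    }


# Arrange: stub the read-only dependency.
def fake_fetch(item_id):
    return {"price": 5}


# Act: send the input request.
a = service_1_handle({"item_id": "x1", "quantity": 3}, fake_fetch)

# Assert: the assertion is on the service's own output.
assert a == {"item_id": "x1", "total": 15}
```

No downstream service has to be mocked here, because the handler no longer knows that one exists.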

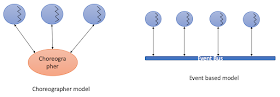

So the question is: how do we break up the workflow so that it is composed mostly of functional services? Simply move the responsibility for orchestrating the workflow into a different service (a dedicated orchestrator), or make the workflow event-based (choreography), where each service reads its input from a queue and persists an output event back to the queue.
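Both options can be sketched in a few lines of Python. This is a toy model under loud assumptions: the workflow is cut down to two services, `service_1` and `service_2` are plain functions standing in for network calls, the invoice logic is invented, and an in-memory `deque` stands in for a real message queue.

```python
from collections import deque


def service_1(request):
    """Functional service: returns intermediate result #A."""
    return {"order": request["order"], "total": request["price"] * request["quantity"]}


def service_2(a):
    """Functional service: consumes #A and returns #B."""
    return {"order": a["order"], "invoice": f"INV-{a['order']}", "total": a["total"]}


def orchestrate(request):
    """Option 1, a separate orchestrator: only wiring, no business logic."""
    a = service_1(request)
    b = service_2(a)
    return b


def run_event_based(request):
    """Option 2, event-based: each service reads an event and writes one back."""
    # Maps an event kind to (kind of the event produced, service that consumes it).
    handlers = {"request": ("a", service_1), "a": ("b", service_2)}
    queue = deque([("request", request)])
    while queue:
        kind, payload = queue.popleft()
        if kind not in handlers:
            return payload              # no consumer left: this is the final event
        next_kind, handler = handlers[kind]
        queue.append((next_kind, handler(payload)))


request = {"order": 7, "price": 5, "quantity": 3}
orchestrated = orchestrate(request)
evented = run_event_based(request)
```

In both variants the services stay functional, and the wiring lives in one small place that has little logic of its own to test.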

What are the benefits?

- Functional services are much more testable in isolation, which fits well with the testing pyramid. We want a heavy set of tests that can be run locally, in isolation and without any significant overhead.

- Orchestration services should be light on business logic, which also makes them more testable.

- Your integration test suite, which we know can be slow and unreliable, stays small. This helps keep CD pipelines fast.

- Personally, it gives me more confidence that the overall workflow will work, because the individual services have been tested thoroughly.